Global Digital Pathology Leader Further Expands Its Patent Portfolio, Enabling New Forms of Image Query

Vista, CA – April 28, 2009 – Aperio Technologies, Inc. (Aperio), a global leader in digital pathology for the healthcare and life sciences industries, announced today that the United States Patent and Trademark Office has issued the company U.S. Patent No. 7,502,519, covering systems and methods for image pattern recognition using vector quantization (VQ). This is Aperio’s second patent on the use of VQ for pattern recognition applications.

As pathology labs, hospitals, biopharma companies and educational institutions increasingly adopt digital pathology, they generate vast libraries of digital slides that play a critical role in disease management, medical research, and education. These libraries have historically been indexed with text-based labels such as tissue type, patient age, or primary diagnosis.

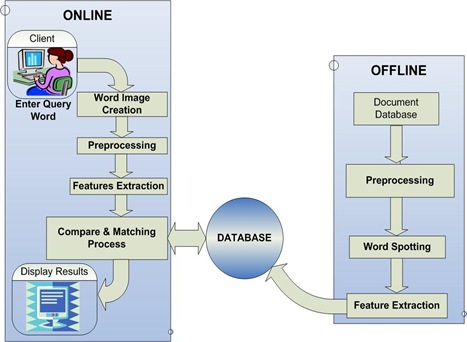

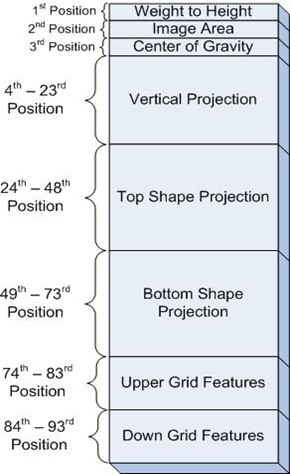

Aperio’s VQ technology now enables content-based image retrieval (CBIR), allowing pathologists and researchers to search libraries of digital slides using image data and to efficiently retrieve similar images from large image archives. The ability to search image archives by image regions of interest, in addition to text-based searches, represents a significant advance in image query.

“Vector quantization is a breakthrough technology providing a novel way to perform content-based image retrieval,” said Dirk Soenksen, CEO of Aperio. “The image pattern recognition technology covered by this patent is unique in that it does not rely on prior knowledge of image-based features, but involves statistical comparisons to imagery data that exhibit characteristics of interest.”
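The release does not describe how the patented method works internally. As a rough, hedged illustration of the general idea of VQ-based retrieval only, the sketch below trains a codebook with the generalized Lloyd (k-means) algorithm on small patch feature vectors, encodes each image as a normalized histogram of codeword usage, and ranks archive images by the L1 distance between histograms. The patch features, codebook size, distance measure, and function names (`train_codebook`, `signature`, `rank_archive`) are all assumptions chosen for illustration, not Aperio’s implementation.

```python
import random
from typing import Dict, List, Sequence

Vector = Sequence[float]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(codebook: List[List[float]], v: Vector) -> int:
    """Encode v as the index of its closest codeword."""
    return min(range(len(codebook)), key=lambda i: sq_dist(codebook[i], v))


def train_codebook(vectors: List[Vector], k: int, iters: int = 20,
                   seed: int = 0) -> List[List[float]]:
    """Design a k-codeword codebook with the generalized Lloyd (k-means) algorithm."""
    rng = random.Random(seed)
    codebook = [list(v) for v in rng.sample(vectors, k)]  # random initial codewords
    for _ in range(iters):
        clusters: List[List[Vector]] = [[] for _ in range(k)]
        for v in vectors:
            clusters[nearest(codebook, v)].append(v)
        for i, members in enumerate(clusters):
            if members:  # an empty cell keeps its previous codeword
                codebook[i] = [sum(col) / len(members) for col in zip(*members)]
    return codebook


def signature(codebook: List[List[float]], patches: List[Vector]) -> List[float]:
    """Normalized histogram of how often each codeword encodes a patch."""
    counts = [0] * len(codebook)
    for p in patches:
        counts[nearest(codebook, p)] += 1
    return [c / len(patches) for c in counts]


def rank_archive(codebook: List[List[float]], query: List[Vector],
                 archive: Dict[str, List[Vector]]) -> List[str]:
    """Archive image ids ordered from most to least similar to the query."""
    q = signature(codebook, query)
    scored = []
    for image_id, patches in archive.items():
        s = signature(codebook, patches)
        l1 = sum(abs(a - b) for a, b in zip(q, s))  # L1 distance between histograms
        scored.append((l1, image_id))
    return [image_id for _, image_id in sorted(scored)]


if __name__ == "__main__":
    # Hypothetical toy 2-D "patch features": bright vs. dark regions.
    archive = {
        "slide_a": [(0.9, 0.8), (0.85, 0.9), (0.95, 0.85)],
        "slide_b": [(0.1, 0.2), (0.15, 0.1), (0.05, 0.15)],
    }
    training = [p for patches in archive.values() for p in patches]
    codebook = train_codebook(training, k=2)
    query = [(0.88, 0.82), (0.92, 0.88)]
    print(rank_archive(codebook, query, archive))  # ['slide_a', 'slide_b']
```

In a real system the patch vectors would more likely be flattened pixel blocks from a region of interest, and the codebook would be trained on a large sample of slides; the histogram comparison is just one simple way to turn codeword statistics into a similarity ranking.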

In addition to providing an efficient way to search large libraries of digital slides for image regions that match a given image, vector quantization also allows searching for exceptions, such as regions of an image that differ from previously characterized images.
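Exception search can be illustrated in the same hedged spirit. A codebook trained on previously characterized images represents familiar content with low quantization error, so patches with unusually high error are candidate exceptions. This minimal sketch assumes such a codebook already exists and uses a hypothetical threshold rule (a multiple of the worst error observed on reference patches); neither the rule nor the function names (`distortion`, `fit_threshold`, `find_exceptions`) come from the patent.

```python
from typing import List, Sequence

Vector = Sequence[float]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def distortion(codebook: List[Vector], v: Vector) -> float:
    """Quantization error: squared distance from v to its nearest codeword."""
    return min(sq_dist(c, v) for c in codebook)


def fit_threshold(codebook: List[Vector], reference: List[Vector],
                  margin: float = 2.0) -> float:
    """Hypothetical rule: margin times the worst error seen on reference patches."""
    return margin * max(distortion(codebook, p) for p in reference)


def find_exceptions(codebook: List[Vector], patches: List[Vector],
                    threshold: float) -> List[int]:
    """Indices of patches the codebook represents poorly (candidate exceptions)."""
    return [i for i, p in enumerate(patches) if distortion(codebook, p) > threshold]


if __name__ == "__main__":
    codebook = [(0.9, 0.85), (0.1, 0.15)]  # assumed pre-trained on characterized images
    reference = [(0.9, 0.8), (0.85, 0.9), (0.1, 0.2), (0.15, 0.1)]
    threshold = fit_threshold(codebook, reference)
    new_patches = [(0.88, 0.86), (0.5, 0.9), (0.12, 0.14)]
    print(find_exceptions(codebook, new_patches, threshold))  # [1]
```

Only the middle patch, which lies far from both codewords, is flagged; the threshold rule is a simple placeholder, and a practical system would likely calibrate it statistically.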

Aperio’s patent portfolio covers all of the elements of a digital pathology system, including digital slide creation, data management, advanced visualization, and image analysis. Aperio holds more than 30 issued patents and pending patent applications worldwide and is the leader in the global digital pathology market, with an installed base of more than 500 systems in 32 countries.