Article from http://quantombone.blogspot.gr/2012/06/predicting-events-in-videos-before-they.html

Intelligence is all about making inferences given observations, but somewhere in the history of Computer Vision, we (as a community) have put too much emphasis on classification tasks. What many researchers in the field (unfortunately this includes myself) focus on is extracting semantic meaning from images, image collections, and videos. Whether the output is a scene category label, an object identity and location, or an action category, the way we proceed is relatively straightforward:

- Extract some measurements from the image (we call them "features", and SIFT and HOG are two very popular such features)

- Feed those features into a machine learning algorithm which predicts the category these features belong to. Some popular choices of algorithms are Neural Networks, SVMs, decision trees, boosted decision stumps, etc.

- Evaluate our features on a standard dataset (such as Caltech-256, PASCAL VOC, ImageNet, LabelMe, etc.)
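For the vision hackers, the three steps above can be sketched in a few lines of plain Python. This is only a toy illustration under loud assumptions: `orientation_histogram` is a crude stand-in for HOG (not real HOG or SIFT), a nearest-centroid rule stands in for the SVM, and the "dataset" is a handful of synthetic striped images generated by a hypothetical `stripes` helper.

```python
import math

def orientation_histogram(image, bins=4):
    """Toy HOG-like feature: magnitude-weighted histogram of unsigned
    gradient orientations over the image interior, L1-normalized."""
    hist = [0.0] * bins
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            gx = image[r][c + 1] - image[r][c - 1]
            gy = image[r + 1][c] - image[r - 1][c]
            magnitude = math.hypot(gx, gy)
            if magnitude == 0:
                continue
            angle = math.atan2(gy, gx) % math.pi  # orientation in [0, pi)
            hist[min(int(angle / math.pi * bins), bins - 1)] += magnitude
    total = sum(hist) or 1.0
    return [h / total for h in hist]

def stripes(vertical, width, size=8):
    """Hypothetical synthetic image: stripes of the given width."""
    return [[((c if vertical else r) // width) % 2 for c in range(size)]
            for r in range(size)]

def train_centroids(features, labels):
    """Nearest-centroid 'training': average the feature vectors per class."""
    sums, counts = {}, {}
    for feature, label in zip(features, labels):
        acc = sums.setdefault(label, [0.0] * len(feature))
        for i, value in enumerate(feature):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def predict(centroids, feature):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    return min(centroids,
               key=lambda y: sum((a - b) ** 2 for a, b in zip(centroids[y], feature)))

def label_of(vertical):
    return "vertical" if vertical else "horizontal"

# Step 1: extract features from a (synthetic) training set.
train_set = [(stripes(v, w), label_of(v)) for v in (True, False) for w in (2, 3)]
train_features = [orientation_histogram(img) for img, _ in train_set]
train_labels = [label for _, label in train_set]

# Step 2: feed the features to a learning algorithm.
centroids = train_centroids(train_features, train_labels)

# Step 3: evaluate on held-out images (stripe width not seen in training).
test_set = [(stripes(v, 4), label_of(v)) for v in (True, False)]
predictions = [predict(centroids, orientation_histogram(img)) for img, _ in test_set]
accuracy = sum(p == y for p, (_, y) in zip(predictions, test_set)) / len(test_set)
print("accuracy:", accuracy)
```

Note that every step here consumes a complete image: nothing in this template asks what the classifier should say when only part of the observation is available.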

While action recognition has only become popular in the last 5 years, it still adheres to the generic machine vision pipeline. But let's consider a scenario where adhering to this template can have disastrous consequences. Let's ask ourselves the following question:

Q: Why did the robot cross the road?

A: The robot didn't cross the road -- he was obliterated by a car. This is because in order to make decisions in the world you can't just wait until all the observations have happened. To build a robot that can cross the road, you need to be able to predict things before they happen! (Alternate answer: The robot died because he wasn't using Minh's early-event detection framework, the topic of today's blog post.)

This year's Best Student Paper winner at CVPR has given us a flavor of something more, something beyond the traditional action recognition pipeline, aka "early event detection." Simply put, the goal is to detect an action before it completes. Minh's research is rather exciting and opens up room for a new paradigm in recognition. If we want intelligent machines roaming the world around us (and every CMU Robotics PhD student knows that this is really what vision is all about), then recognition after an action has happened will not enable our robots to do much beyond passive observation. Prediction (and not classification) is the killer app of computer vision, because classification assumes you are given all the data, while prediction assumes there is an intent to interpret the future and act on it.

While Minh's work focused on simpler tasks such as facial expression recognition, hand gesture recognition, and human activity recognition, I believe these ideas will help make machines more intelligent and more suitable for performing actions in the real world.

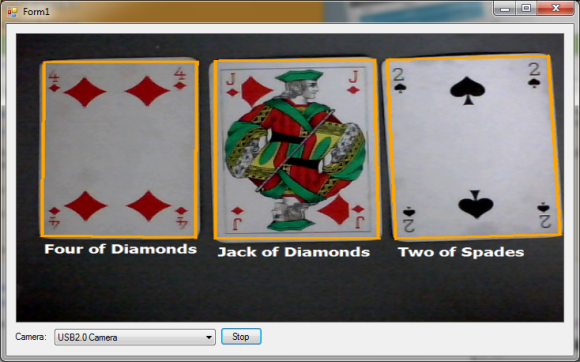

Disgust detection example from CVPR 2012 paper

To give the vision hackers a few more details, this framework uses Structural SVMs (NOTE: trending topic at CVPR) and is able to estimate the probability of an action happening before it actually finishes. This is something which we, humans, seem to do all the time but has been somehow neglected by machine vision researchers.
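To make "detecting before it finishes" concrete, here is a minimal sketch of the decision rule only. It is not Minh's max-margin formulation: in the paper the detector is learned with a Structured Output SVM, whereas here the per-frame evidence values are made up by hand and the detector is a simple CUSUM-style running sum. The function name `early_detect`, the scores, and the threshold are all assumptions for illustration.

```python
def early_detect(frame_scores, threshold):
    """Return the index of the first frame at which the accumulated
    evidence for an ongoing event reaches `threshold`, else None."""
    running = 0.0
    for t, score in enumerate(frame_scores):
        # Best total evidence of any partial segment ending at frame t,
        # floored at zero so that old negative evidence is forgotten.
        running = max(0.0, running + score)
        if running >= threshold:
            return t
    return None

# Hypothetical per-frame evidence: negative on neutral frames,
# positive while the event (say, a disgust expression) is unfolding.
event_start, event_end = 5, 14
frame_scores = [-1.0] * 5 + [1.0] * 10 + [-1.0] * 5

fire_frame = early_detect(frame_scores, threshold=3.0)
frames_seen = fire_frame - event_start + 1
event_length = event_end - event_start + 1
print("fired at frame", fire_frame, "after seeing",
      frames_seen, "of", event_length, "event frames")
```

The detector fires on a partial event instead of waiting for the last frame. A lower threshold fires earlier but is easier to fool with a short burst of noise; that trade-off between timeliness and accuracy is what a learned early detector has to balance.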

Max-Margin Early Event Detectors.

Hoai, Minh & De la Torre, Fernando

CVPR 2012

Abstract:

The need for early detection of temporal events from sequential data arises in a wide spectrum of applications ranging from human-robot interaction to video security. While temporal event detection has been extensively studied, early detection is a relatively unexplored problem. This paper proposes a maximum-margin framework for training temporal event detectors to recognize partial events, enabling early detection. Our method is based on Structured Output SVM, but extends it to accommodate sequential data. Experiments on datasets of varying complexity, for detecting facial expressions, hand gestures, and human activities, demonstrate the benefits of our approach. To the best of our knowledge, this is the first paper in the literature of computer vision that proposes a learning formulation for early event detection.

Early Event Detector Project Page (code available on website)

Minh gave an excellent, enthusiastic, and entertaining presentation during day 3 of CVPR 2012, and it was definitely one of the highlights of that day. He received his PhD from CMU's Robotics Institute (like me, yipee!) and is currently a postdoctoral research scholar in Andrew Zisserman's group at Oxford. Let's all congratulate Minh for all his hard work.

