Article from: http://multimediacommunication.blogspot.gr/2013/05/multiple-sensorial-mulsemedia-multi.html

Call for Papers

ACM Transactions on Multimedia Computing, Communications, and Applications (TOMCCAP)

Multiple Sensorial (MulSeMedia) Multi-modal Media: Advances and Applications

Multimedia applications have primarily engaged two of the human senses: sight and hearing. With recent advances in computational technology, however, it is possible to develop applications that also consider, integrate, and synchronize inputs across all senses, including the tactile, olfactory, and gustatory senses. This integration of multiple senses leads to a paradigm shift towards a new mulsemedia (multiple sensorial media) experience, aligning rich data from multiple human senses. Mulsemedia brings with it new and exciting challenges and opportunities in research, industry, commerce, and academia. This special issue solicits contributions dealing with mulsemedia in all of these areas. Topics of interest include, but are not limited to, the following:

- Context-aware Mulsemedia

- Metrics for Mulsemedia

- Capture and synchronization of Mulsemedia

- Mulsemedia devices

- Mulsemedia in distributed environments

- Mulsemedia integration

- Mulsemedia user studies

- Multi-modal mulsemedia interaction

- Mulsemedia and virtual reality

- Quality of service and Mulsemedia

- Quality of experience and Mulsemedia

- Tactile/haptic interaction

- User modelling and Mulsemedia

- Mulsemedia and e-learning

- Mulsemedia and e-commerce

- Mulsemedia Standards

- Mulsemedia applications (e.g. e-commerce, e-learning, e-health, etc.)

- Emotional response (e.g. EEG) to Mulsemedia

- Mulsemedia sensor research

- Mulsemedia databases

Important Dates

- Paper Submission: 14/10/2013

- First Decision: 13/01/2014

- Paper Revision Submission: 03/03/2014

- Second Decision: 28/04/2014

- Accepted Papers Due: 12/05/2014

Guest Editors

- George Ghinea (Brunel University, UK)

- Stephen Gulliver (University of Reading, UK)

- Christian Timmerer (Alpen-Adria-Universität, Klagenfurt, Austria)

- Weisi Lin (Nanyang Technological University, Singapore)

Submission Procedure

All submission guidelines of TOMCCAP, such as formatting, page limits, and extensions of previously submitted conference papers, must be adhered to. Please see the Authors Guide section of the TOMCCAP website for more details (http://tomccap.acm.org). To submit, please follow these instructions:

- Submit your paper through TOMCCAP’s online system at http://mc.manuscriptcentral.com/tomccap. When submitting, please use the Manuscript Type ‘Special Issue: MulSeMedia’ in the ManuscriptCentral system.

- In your cover letter, include the information “Special Issue on Mulsemedia” and, if submitting an extended version of a conference paper, explain how the new submission is different and extends previously published work.

- After you submit your paper, the system will assign a manuscript number to it. Please email this number to guesteditors2014@kom.tu-darmstadt.de together with the title of your paper.

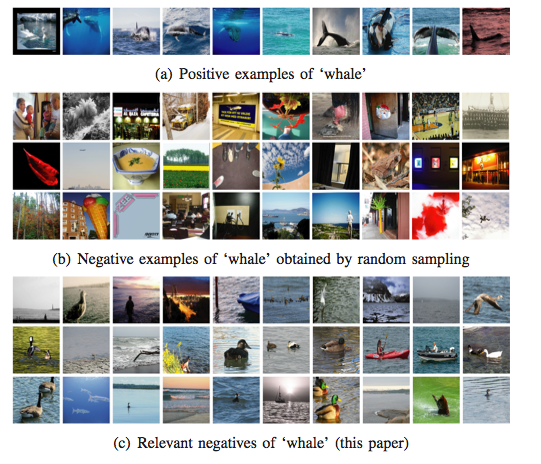

The GRire library is an open-source, lightweight framework for implementing CBIR (Content-Based Image Retrieval) methods. It contains various image feature extractors, descriptors, classifiers, databases, and other necessary tools. Currently, the main objective of the project is to implement the BOVW (Bag of Visual Words) approach. Apart from the image analysis tools, it therefore also offers methods from the field of IR (Information Retrieval), e.g. weighting models such as SMART and Okapi, adjusted to meet the Image Retrieval perspective.
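
To make the BOVW idea concrete, here is a minimal sketch in Python. It does not use GRire's API: every function name, the toy codebook, and the choice of a plain tf-idf weighting with cosine normalisation (a simple stand-in for the SMART and Okapi families of weighting models) are assumptions made for illustration. Each local descriptor is mapped to its nearest "visual word", the words are counted per image, and the counts are weighted the way term counts would be in text retrieval.

```python
import math
from collections import Counter


def nearest_word(descriptor, codebook):
    """Index of the codebook entry closest to the descriptor (squared Euclidean)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((d - c) ** 2 for d, c in zip(descriptor, codebook[i])),
    )


def bovw_counts(descriptors, codebook):
    """Quantize each local descriptor to a visual word and count occurrences."""
    return Counter(nearest_word(d, codebook) for d in descriptors)


def idf(collection_counts, vocab_size):
    """Smoothed inverse document frequency for every visual word."""
    n = len(collection_counts)
    df = [sum(1 for c in collection_counts if c[w] > 0) for w in range(vocab_size)]
    return [math.log((n + 1) / (df_w + 1)) + 1.0 for df_w in df]


def tfidf_vector(counts, idf_weights):
    """L2-normalised tf-idf vector for one image."""
    vec = [counts[w] * idf_weights[w] for w in range(len(idf_weights))]
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def cosine(a, b):
    """Cosine similarity of two vectors that are already L2-normalised."""
    return sum(x * y for x, y in zip(a, b))


def rank(query_descriptors, images, codebook):
    """Return (name, score) pairs for the indexed images, best match first.

    `images` maps an image name to its list of local descriptors (hypothetical
    input format chosen for this sketch).
    """
    names = list(images)
    counts = [bovw_counts(images[n], codebook) for n in names]
    weights = idf(counts, len(codebook))
    query_vec = tfidf_vector(bovw_counts(query_descriptors, codebook), weights)
    scores = [cosine(query_vec, tfidf_vector(c, weights)) for c in counts]
    return sorted(zip(names, scores), key=lambda pair: pair[1], reverse=True)
```

In a real system the codebook would typically be learned by clustering descriptors from a training set, and the weighting function is the piece that would be swapped for another scheme such as Okapi BM25.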