Article from http://www.theregister.co.uk/2009/07/09/more_july_apple_patents/

The US Patent and Trademark Office published 33 new Apple patent applications on Thursday, bringing July's total to 55 - and we're not even a third of the way through the month.

Today's cluster of creativity ranged from flexible cabling to scrolling lyrics, but the bulk of the filings described new powers for the ubiquitous iPhone and its little brother, the iPod touch - especially when the 'Pod is equipped with a camera, which it seems destined to be.

Two of the filings are directly camera-related. One focuses on object identification and the other on face recognition. The former is targeted specifically at handhelds, while the latter's reach extends both into your pocket and out to the entire universe of consumer electronics.

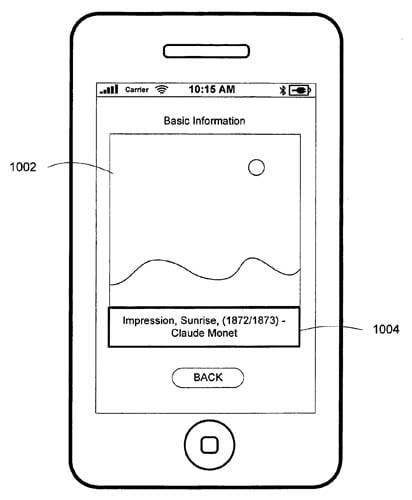

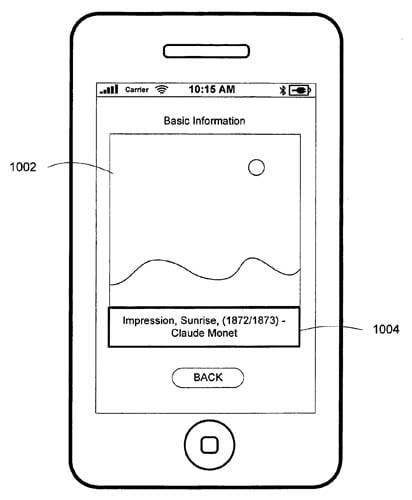

The object-identification filing describes a system in which a handheld's camera captures an image of an object in either visible light or infrared, then compares that image with information stored on a network. The network then asks you what information about that object you'd like to download, and provides it.

The filing also describes the system using an RFID reader rather than a camera, but the detect-compare-download sequence remains the same.

Apple uses a museum visit to illustrate the utility of this technology: You could simply point your iPhone at a work of art and quickly be presented with info about its artist, genre, provenance, and the availability of T-shirts featuring that work in the museum store - which another of Thursday's filings, on online shopping, could help you buy.

Tapping into a handheld's GPS and digital compass could also enable the system to provide location-based resources - the filing suggests a "RESTAURANT mode" to help you find eats in Vegas - and to support the captioned landscapes of augmented reality that are getting so much press lately.

Claude Monet at his most minimalist, captured and ID-ed by your iPhone

Finally, the filing includes a way for you to capture a log of all the identified objects, complete with downloaded multimedia content for creating a record of your peregrinations. Look for such a media-rich slideshow to appear in some student's "How I Spent My Summer Vacation" school assignment.

The face-recognition filing describes two different systems: one that merely checks for a face - any face - and a second that matches what it sees against a database of people it knows.

The system that doesn't care who you are is merely looking to see if anyone's using it - and if someone is, it won't time out, as an iPhone would, or fire up a screensaver, as a PC would. The system that knows you by your dashing good looks would also be aware of your privilege level, and allow access to its services based on that level.

Apple, as usual in its patent applications, isn't shy about the scope of this technology. It lists 37 different devices that could incorporate it, from personal communications devices to vehicle operating systems to automatic teller machines. Then, just in case it forgot anything, it adds "any like computing device capable of interfacing with a person."

That should just about cover it.
