Wednesday, December 15, 2010

Two papers accepted at ECIR 2011 (one oral, one poster)

1. Fusion vs. Two-Stage for Multimodal Retrieval

Abstract. We compare two methods for retrieval from multimodal collections. The first is a score-based fusion of results retrieved visually and textually. The second is a two-stage method that visually re-ranks the top-K results retrieved textually. We discuss their underlying hypotheses and practical limitations, and conduct a comparative evaluation on a standardized snapshot of Wikipedia. Both methods are found to be significantly more effective than single-modality baselines, with no clear winner but with different robustness features. Nevertheless, two-stage retrieval provides efficiency benefits over fusion.
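The abstract does not spell out the fusion formula. As a minimal sketch, assuming each modality returns one similarity score per document, score-based fusion can min-max normalize both runs and combine them linearly. The class `ScoreFusion` and the `textWeight` parameter are hypothetical illustrations, not the paper's method or notation.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical sketch of score-based late fusion of a text run and a visual run.
public static class ScoreFusion
{
    // Min-max normalize one run so that scores from different modalities are comparable.
    // A run whose scores are all equal maps every document to 1.0.
    public static Dictionary<string, double> MinMax(Dictionary<string, double> run)
    {
        double min = run.Values.Min(), max = run.Values.Max();
        double range = max - min;
        return run.ToDictionary(kv => kv.Key,
            kv => range > 0 ? (kv.Value - min) / range : 1.0);
    }

    // Weighted linear combination; a document missing from one run contributes 0 for that modality.
    // Returns the fused ranking, best first.
    public static List<KeyValuePair<string, double>> Fuse(
        Dictionary<string, double> textScores,
        Dictionary<string, double> visualScores,
        double textWeight)
    {
        var t = MinMax(textScores);
        var v = MinMax(visualScores);
        var fused = new Dictionary<string, double>();
        foreach (var id in t.Keys.Union(v.Keys))
        {
            t.TryGetValue(id, out double ts); // 0 when the id is absent
            v.TryGetValue(id, out double vs);
            fused[id] = textWeight * ts + (1 - textWeight) * vs;
        }
        return fused.OrderByDescending(kv => kv.Value).ToList();
    }
}
```

The weight trades off the two modalities; 0.5 treats them equally, and tuning it per collection is left open here.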

2. Dynamic Two-Stage Image Retrieval from Large Multimodal Databases

Abstract. Content-based image retrieval (CBIR) with global features is notoriously noisy, especially for image queries with low percentages of relevant images in a collection. Moreover, CBIR typically ranks the whole collection, which is inefficient for large databases. We experiment with a method for image retrieval from multimodal databases, which improves both the effectiveness and efficiency of traditional CBIR by exploring secondary modalities. We perform retrieval in a two-stage fashion: first rank by a secondary modality, and then perform CBIR only on the top-K items. Thus, effectiveness is improved by performing CBIR on a ‘better’ subset. Using a relatively ‘cheap’ first stage, efficiency is also improved via the fewer CBIR operations performed. Our main novelty is that K is dynamic, i.e. estimated per query to optimize a predefined effectiveness measure. We show that such dynamic two-stage setups can be significantly more effective and robust than similar setups with static thresholds previously proposed.
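A static-K version of the two-stage setup can be sketched as follows, assuming a precomputed secondary-modality (e.g. text) score for every item and a visual distance function supplied by the caller. The per-query estimation of K, which is the paper's main novelty, is not reproduced: `k` is simply a parameter, and all names are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical sketch of static-K two-stage retrieval (the paper estimates K per query; this does not).
public static class TwoStage
{
    public static List<string> Retrieve(
        IReadOnlyDictionary<string, double> secondaryScores,
        Func<string, double> visualDistance,
        int k)
    {
        // Stage 1: rank the whole collection by the cheap secondary-modality score (higher is better).
        var candidates = secondaryScores.OrderByDescending(kv => kv.Value)
                                        .Take(k)
                                        .Select(kv => kv.Key);

        // Stage 2: compute the expensive visual distance only for the K candidates,
        // once each, and re-rank them by it (lower distance is more similar).
        return candidates.Select(id => (Id: id, Distance: visualDistance(id)))
                         .OrderBy(c => c.Distance)
                         .Select(c => c.Id)
                         .ToList();
    }
}
```

The efficiency argument in the abstract is visible here: `visualDistance` runs K times instead of once per collection item.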

Friday, December 10, 2010

Using Sketch Recognition and Corrective Feedback to Assist a User in Drawing Human Faces (Dixon)


iCanDraw is the first application that uses sketch recognition to help users learn how to draw. Although most of the algorithms and techniques in this paper are not new, its major contribution is opening a new field of application for sketch recognition, and it shows that sketch recognition can be very useful in this kind of application. The results of two iterations of the application show that such an application is feasible; although many more studies are needed to prove that it is an effective teaching tool, the end-to-end system is now available to begin such studies. Another important result of the paper is the set of design principles for assisted free-sketch drawing applications derived from the user study.

The user interface of the application is remarkably well executed. After the first iteration and a thorough analysis of it, many mistakes and weaknesses were detected and corrected, so the final version of the interface is very user-oriented and can offer a much more effective teaching experience. Each face template is passed through a face recognizer to extract its most prominent features, and some hand corrections are then applied to obtain a template of the ideal face sketch. Recognition is therefore mostly template-matching oriented. Some gesture recognition is also used in the interface for actions such as erasing or undoing.
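The paper's matching procedure is not detailed in this review. As an illustration only, template-oriented feedback could compare the user's stroke points with the template points using a one-sided Chamfer distance (the mean distance from each drawn point to its nearest template point) and accept the drawing below a tolerance. The class, method names and tolerance parameter are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical template-matching check for a drawn stroke.
public static class SketchFeedback
{
    // Mean distance from every drawn point to its nearest template point (one-sided Chamfer distance).
    // Both point lists must be non-empty.
    public static double MeanNearestDistance(
        IReadOnlyList<(double X, double Y)> drawn,
        IReadOnlyList<(double X, double Y)> template)
    {
        return drawn.Average(p => template.Min(q =>
            Math.Sqrt((p.X - q.X) * (p.X - q.X) + (p.Y - q.Y) * (p.Y - q.Y))));
    }

    // Accept the stroke if it stays within the tolerance; a looser tolerance could model an easier lesson.
    public static bool IsCloseEnough(
        IReadOnlyList<(double X, double Y)> drawn,
        IReadOnlyList<(double X, double Y)> template,
        double tolerance)
        => MeanNearestDistance(drawn, template) <= tolerance;
}
```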

Discussion

The work presented opens a very interesting field of application for sketch recognition. In the sketch recognition class, a project on how to draw an eye is one possible descendant of this work. I think one of the major challenges in this field is determining the appropriate amount and quality of feedback given to the user. If the user is forced to draw too close to the template, the experience can be frustrating; if the matching is too loose, the improvement in drawing may be poor. One solution might be to offer several difficulty levels in different lessons.

Thursday, December 9, 2010

My Defense Online

Monday, December 6, 2010

1st ACM International Conference on Multimedia Retrieval: Call for Multimedia Retrieval Demonstrators

The First ACM International Conference on Multimedia Retrieval (ICMR) brings together the long-standing experience of the former ACM CIVR and ACM MIR conferences. It is the ideal forum to present and discover the most recent developments and applications in the area of multimedia content retrieval. Originally set up to illuminate the state of the art in image and video retrieval, ICMR aims to become the world reference event in this exciting field of research, where researchers and practitioners can exchange knowledge and ideas.

ICMR 2011 is accepting proposals for technical demonstrators that will be showcased during the conference. The session will include demonstrations of the latest innovations by research and engineering groups in industry, academia and government. ICMR 2011 seeks original, high-quality submissions addressing innovative research in the broad field of multimedia retrieval. Demonstrations can relate to any of the topics defined by ICMR in the call for papers. The technical demonstration showcase will run concurrently with the regular ICMR sessions in the poster area.

Papers must be formatted according to the ACM conference style. Papers must not exceed 2 pages in 9-point font and must be submitted as PDF files. When submitting the paper, please make sure that the names and affiliations of the authors are included in the document. Both Microsoft Word and LaTeX formats are accepted. The paper templates can be downloaded directly from the ACM website at http://www.acm.org/sigs/publications/proceedings-templates

All demo submissions will be peer-reviewed to ensure maximum quality, and accepted demo papers will be included in the conference proceedings. An award for the best demo will be announced during the social event.

April 17-20, 2011, Trento, Italy

TOP-SURF

TOP-SURF is an image descriptor that combines interest points with visual words, resulting in a high performance yet compact descriptor that is designed with a wide range of content-based image retrieval applications in mind. TOP-SURF offers the flexibility to vary descriptor size and supports very fast image matching. In addition to the source code for the visual word extraction and comparisons, we also provide a high level API and very large pre-computed codebooks targeting web image content for both research and teaching purposes.
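Assigning interest-point descriptors to visual words and counting them can be sketched as below. This uses exact nearest-neighbor search over a small codebook, whereas TOP-SURF relies on FLANN for approximate matching against very large codebooks; the class and method names are hypothetical and are not part of the TOP-SURF API.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical bag-of-visual-words sketch (not the TOP-SURF API).
public static class VisualWords
{
    static double SquaredDistance(double[] a, double[] b)
    {
        double s = 0;
        for (int i = 0; i < a.Length; i++) { double d = a[i] - b[i]; s += d * d; }
        return s;
    }

    // Index of the codebook entry closest to the descriptor (exact linear search).
    public static int NearestWord(double[] descriptor, IReadOnlyList<double[]> codebook)
    {
        int best = 0;
        double bestDist = double.MaxValue;
        for (int w = 0; w < codebook.Count; w++)
        {
            double d = SquaredDistance(descriptor, codebook[w]);
            if (d < bestDist) { bestDist = d; best = w; }
        }
        return best;
    }

    // Count how often each visual word occurs among an image's interest-point descriptors.
    public static int[] Histogram(IEnumerable<double[]> descriptors, IReadOnlyList<double[]> codebook)
    {
        var counts = new int[codebook.Count];
        foreach (var d in descriptors) counts[NearestWord(d, codebook)]++;
        return counts;
    }
}
```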

Authors

Bart Thomee, Erwin M. Bakker and Michael S. Lew

Licenses

The TOP-SURF descriptor is completely open source, although the libraries it depends on use different licenses. As the original SURF descriptor is closed source, we used the open source alternative OpenSURF, which is released under the GNU GPL version 3 license. OpenSURF itself depends on OpenCV, which is released under the BSD license. Furthermore, we used FLANN for approximate nearest neighbor matching, which is also released under the BSD license. To represent images we used CxImage, which is released under the zlib license. Our own code is licensed under the GNU GPL version 3 license and also under the Creative Commons Attribution version 3 license. The latter license simply asks that you give us credit whenever you use our library. All the aforementioned open source licenses are compatible with each other.

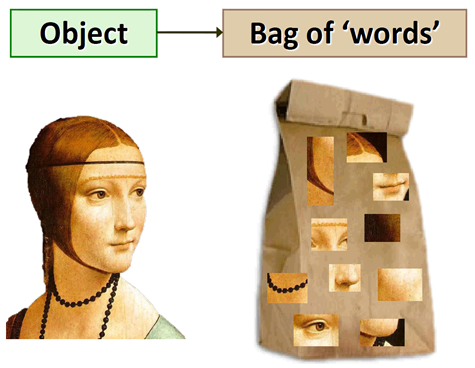

Figure 1. Visualizing the visual words.

Figure 2. Comparing the descriptors of several images using the cosine normalized difference, which ranges between 0 (identical) and 1 (completely different). The first image is the original image, the second is the original image with significantly altered saturation, the third is the original image framed with black borders, and the fourth is a completely different image. Using a dictionary of 10,000 words, the distance between the first and second images is 0.42, between the first and third 0.64, and between the first and fourth 0.98. We have noticed that a (seemingly high) threshold of around 0.80 appears to separate near-duplicates from non-duplicates, although this value requires more validation.
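Assuming the cosine normalized difference is one minus the cosine similarity of the two visual-word histograms (which, for non-negative histograms, lies between 0 and 1), the comparison and the tentative 0.80 threshold could look like this. The class name and the handling of empty histograms are hypothetical.

```csharp
using System;

// Hypothetical near-duplicate check on visual-word histograms.
public static class NearDuplicate
{
    // One minus the cosine similarity; for non-negative histograms the result lies in [0, 1].
    public static double CosineDistance(double[] a, double[] b)
    {
        if (a.Length != b.Length) throw new ArgumentException("Histograms must have the same length.");
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        // Treat an empty histogram as completely different (an assumption, not from the post).
        if (normA == 0 || normB == 0) return 1.0;
        return 1.0 - dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
    }

    // The post suggests a threshold of roughly 0.80, pending further validation.
    public static bool IsNearDuplicate(double[] a, double[] b, double threshold = 0.80)
        => CosineDistance(a, b) < threshold;
}
```

With this threshold, the framed image in Figure 2 (distance 0.64) would count as a near-duplicate and the unrelated image (0.98) would not.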

Saturday, December 4, 2010

17th INTERNATIONAL CONFERENCE ON DIGITAL SIGNAL PROCESSING

6-8 July 2011, Corfu Island, Greece

The 2011 17th International Conference on Digital Signal Processing (DSP2011), the longest-running conference in the area of DSP (http://www.dsp-conferences.org/), organized in cooperation with the IEEE and EURASIP, will be held on July 6-8, 2011, on the island of Corfu, Greece.

DSP2011 addresses the theory and application of filtering, coding, transmitting, estimating, detecting, analyzing, recognizing, synthesizing, recording, and reproducing signals by means of digital devices or techniques. The term "signal" includes audio, video, speech, image, communication, geophysical, sonar, radar, medical, musical, and other signals.

The program will include presentations of novel research theories, applications and results in lecture, poster and plenary sessions. A significant number of special sessions organised by internationally recognised experts in the area will be held (http://www.dsp2011.gr/special-sessions).

Topics of interest include, but are not limited to:

- Adaptive Signal Processing

- Array Signal Processing

- Audio / Speech / Music Processing & Coding

- Biomedical Signal and Image Processing

- Digital and Multirate Signal Processing

- Digital Watermarking and Data Hiding

- Geophysical / Radar / Sonar Signal Processing

- Image and Multidimensional Signal Processing

- Image/Video Content Analysis

- Image/Video Indexing, Search and Retrieval

- Image/Video Processing Techniques

- Image/Video Compression & Coding Standards

- Information Forensics and Security

- Multidimensional Filters and Transforms

- Multiresolution Signal Processing

- Nonlinear Signals and Systems

- Real-Time Signal/Image/Video Processing

- Signal and System Modelling

- Signal Processing for Smart Sensor & Systems

- Signal Processing for Telecommunications

- Social Signal Processing and Affective Computing

- Statistical Signal Processing

- Theory and Applications of Transforms

- Time-Frequency Analysis and Representation

- VLSI Architectures & Implementations

Prospective authors are invited to electronically submit the full camera-ready paper (http://www.dsp2011.gr/submitpapers). The paper must not exceed six (6) pages, including figures, tables and references, and should be written in English. Faxed submissions are not acceptable.

Papers should follow the double-column IEEE format. Authors should indicate one or more of the above listed categories that best describe the topic of the paper, as well as their preference (if any) regarding lecture or poster sessions. Lecture and poster sessions will be treated equally in the review process. The program committee will make every effort to satisfy these preferences. Submitted papers will be reviewed by at least two referees, and all accepted papers will be published in the Conference Proceedings (CD-ROM) and made available in the IEEE Xplore digital library. In addition to the technical program, a social program will be offered to participants and their companions. It will provide an opportunity to meet colleagues and friends against a backdrop of outstanding natural beauty and rich cultural heritage in one of the best-known international tourist destinations.

Wednesday, December 1, 2010

Image Processing in Windows Presentation Foundation (.NET Framework 3.0)

Many image processing researchers who develop in .NET/C# are still stuck with GDI+. That means they still use unsafe blocks and access the image data through pointers. This has changed with the introduction of Windows Presentation Foundation (WPF) in .NET Framework 3.0 and later. In this blog post, we discuss how to open and process an image using the WPF tools. Assume that image.png is a 32 bits/pixel color image. To access the image pixels:

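    // Needs: using System; using System.IO; using System.Windows.Media.Imaging;
    // new Uri("image.png") would throw a UriFormatException, so build an absolute file URI first.
    PngBitmapDecoder myImage = new PngBitmapDecoder(new Uri(Path.GetFullPath("image.png")), BitmapCreateOptions.DelayCreation, BitmapCacheOption.OnLoad);
    // Bgra32 uses 4 bytes per pixel, so each row (the stride) is PixelWidth * 4 bytes.
    byte[] myImageBytes = new byte[myImage.Frames[0].PixelWidth * 4 * myImage.Frames[0].PixelHeight];
    myImage.Frames[0].CopyPixels(myImageBytes, myImage.Frames[0].PixelWidth * 4, 0);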

The first line creates a decoder object using the PngBitmapDecoder class. The myImage.Frames collection holds the decoded image frames. Since a single PNG image is opened in this example, the collection contains one frame, which is accessed as myImage.Frames[0].

Then a byte array is created to hold the pixel data, and the CopyPixels method of Frames[0] copies the pixels of the opened image into it. In this particular example, because the pixel format is Bgra32, the array size in bytes is 4 × Width × Height. In general, the ordering of bytes in the bitmap corresponds to the ordering of the letters B, G, R and A in the format name, so in Bgra32 the order is Blue, Green, Red and Alpha. The pixel format of an image is available via myImage.Frames[0].Format, and its palette (if it uses one) via myImage.Frames[0].Palette. If a PNG decodes to a different pixel format, it can first be converted with the FormatConvertedBitmap class.
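The byte layout just described can be captured in a small helper that does not depend on WPF. It assumes unpadded Bgra32 rows, i.e. a stride of 4 × width bytes, as in the CopyPixels call above; the `Bgra32` class is hypothetical.

```csharp
// Hypothetical helper for addressing pixels in a Bgra32 byte buffer.
public static class Bgra32
{
    public const int BytesPerPixel = 4;

    // Byte offset of channel c (0 = Blue, 1 = Green, 2 = Red, 3 = Alpha) of pixel (x, y)
    // in a buffer whose rows are `stride` bytes long (stride = 4 * width when rows are not padded).
    public static int Offset(int x, int y, int stride, int channel)
        => y * stride + x * BytesPerPixel + channel;
}
```

For a 10-pixel-wide image (stride 40), the red byte of pixel (2, 1) sits at offset 40 + 8 + 2 = 50, which is the same `4 * x + y * (4 * Width) + 2` expression used in the greyscale loop.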

To manipulate the image pixels in order to create a greyscale image:

    int Width = myImage.Frames[0].PixelWidth;
    int Height = myImage.Frames[0].PixelHeight;
    int stride = 4 * Width; // bytes per row in a Bgra32 buffer

    // Row-by-row traversal follows the memory layout of the array.
    for (int y = 0; y < Height; y++)
    {
        for (int x = 0; x < Width; x++)
        {
            int i = 4 * x + y * stride; // offset of the pixel's Blue byte
            int r = myImageBytes[i + 2];
            int g = myImageBytes[i + 1];
            int b = myImageBytes[i + 0];

            // Weighted luminance (rounded ITU-R BT.601 weights 0.299, 0.587, 0.114).
            int greyvalue = (int)(0.3 * r + 0.59 * g + 0.11 * b);

            // Write the same value into B, G and R; the alpha byte (i + 3) is left unchanged.
            myImageBytes[i + 2] = (byte)greyvalue;
            myImageBytes[i + 1] = (byte)greyvalue;
            myImageBytes[i + 0] = (byte)greyvalue;
        }
    }

Finally, to create a new image object from the byte array and save it:

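    // Needs: using System.IO; using System.Windows.Media; using System.Windows.Media.Imaging;
    // Wrap the modified bytes in a new BitmapSource (96 dpi, Bgra32, no palette, stride = 4 * Width).
    BitmapSource myNewImage = BitmapSource.Create(Width, Height, 96, 96, PixelFormats.Bgra32, null, myImageBytes, 4 * Width);
    // Encode as BMP, so the output file gets a matching .bmp extension.
    BmpBitmapEncoder enc = new BmpBitmapEncoder();
    enc.Frames.Add(BitmapFrame.Create(myNewImage));
    // The using block closes the stream even if Save throws.
    using (FileStream fs = new FileStream("newimage.bmp", FileMode.Create))
    {
        enc.Save(fs);
    }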

A BitmapSource object is created with the corresponding Width, Height and PixelFormat. Then a BmpBitmapEncoder object is created to save the image in BMP format. A frame is added, and the encoder saves the image to the file stream. To write a PNG file instead, use the PngBitmapEncoder class.

These image manipulation tools are only a subset of those available in WPF. One example is the WriteableBitmap class, which, unlike the bitmaps used above, is not immutable and is therefore suitable for dynamic images.

Dr Konstantinos Zagoris (email: kzagoris@gmail.com, personal web site: http://www.zagoris.gr) received the Diploma in Electrical and Computer Engineering in 2003 from Democritus University of Thrace, Greece, and his PhD from the same university in 2010. His research interests include document image retrieval, color image processing and analysis, document analysis, pattern recognition, databases and operating systems. He is a member of the Technical Chamber of Greece.

Tuesday, November 30, 2010

Research Fellow - Image Retrieval


Centre for Interactive Systems Research, City University London

This post offers the opportunity to work on Piclet, a project funded by EPSRC and TSB. The project will create a platform for image retrieval, sharing, use and recommendation. It will provide users with an environment for interactive image retrieval, with possibilities for buying and selling images. The project considers the image information seeking and retrieval behaviour of different user groups, including professionals. Partners from industry include an SEO (search engine optimisation) company and a media advertising company.

In this post you will develop novel image retrieval algorithms based on content-based techniques, text retrieval and user context. You will implement and integrate these in an image retrieval system. The project will make use of current state-of-the-art image retrieval techniques, collections, relevant open-source initiatives and evaluation methods. You will be involved in integrating these, creating user interfaces and developing new system functionality where necessary.

Applicants should be qualified in Information Retrieval (or related area).

More specifically, expertise in text and image retrieval techniques will be needed. The qualification sought is a PhD or equivalent experience in the field. Strong programming skills are essential, and experience in any of the following areas is an advantage: Information Retrieval (IR); user-centred IR system design, implementation and evaluation; context learning, adaptive IR, recommender systems, social media; user interface design and evaluation.

Experience in collaborating with partners from industry is desirable.

For informal enquiries, please contact Dr. Ayse Goker:

Closing Date: 10 December, 2010

Details available via http://tinyurl.com/338ajpf (The post will also be advertised on www.jobs.ac.uk )

Monday, November 29, 2010

Public Thesis Defense–Invitation

My thesis has officially passed committee and, after a final check-the-commas read-through, will be on its way to the external examiners.

December 09, 10:00, Democritus University of Thrace

If you are, or will be, in Xanthi, it would be great if you could join in person. If you are not, there will be a Skype conference call, with preference given to research participants who would like to listen in. Please email me to reserve a slot.

Abstract – Introduction

This chapter lists the goals and the contribution of the current thesis. The goals which were set up at the beginning of this work and which were adjusted during the process are:

- The creation of a new family of descriptors that combine more than one low-level feature in a compact vector and that can be incorporated into the existing MPEG-7 standard. The descriptors will be constructed via intelligent techniques.

- The creation of a method for accelerating the searching procedure.

- The investigation of several Late Fusion methods for image retrieval.

- The creation of methods which will allow the use of the proposed descriptors in distributed image databases.

- The development of software that implements a large number of the descriptors proposed in the literature.

- The development of open source libraries which will utilize the proposed descriptors as well as the MPEG-7 descriptors.

- The creation of a new method for encrypting images which will utilize features and parameters from the image retrieval field.

- The creation of a new method and system implementation which will employ the proposed descriptors in order to achieve video summarization.

- The creation of a new method and system implementation for image retrieval based on keywords that will be automatically generated using the proposed descriptors.

- Finally, the creation of a new method and system implementation for multi-modal search. The system will utilize both low-level elements (originating from the proposed descriptors) and high-level elements (originating from the keywords that accompany the images).

Thesis Details

In the past few years there has been a rapid increase in multi-media data, mostly due to the evolution of information technology. One of the main components of multi-media data is visual multi-media data, which includes digital images and video. While the production, compression and propagation of such media have been a subject of scientific interest for a long time, in the past few years, precisely because of the growth in the volume of data, a large part of the research has turned towards the management and retrieval of such material.

The first steps in the automated management and retrieval of visual multi-media can be traced back to 1992, when the term Content-Based Retrieval was first used. Since then, a new research field has emerged which, approximately 20 years later, remains active. And while this field initially seemed to fall under the general spectrum of information retrieval, over the years it has attracted scientists from various disciplines.

Even though a large number of scientists work in this field, no satisfactory and widely accepted solution to the problem has been proposed. The second chapter of this thesis gives a brief overview of the fundamentals of content-based image retrieval.

During the course of this thesis, a study was carried out that describes the most commonly used methods for retrieval evaluation and notes their weaknesses. It also proposes a new method for measuring the performance of retrieval systems, and an extension of this method that takes into account, during the evaluation of retrieval results, both the size of the database in which the search is executed and the size of the ground truth of each query. The proposed method is generic and can be used to evaluate the retrieval performance for any type of information. This work is described in detail in Chapter 3.

The core of the method proposed in this thesis is incorporated into the second thematic unit. This unit includes a number of low-level descriptors whose features originate from the content of the multi-media data they describe. In contrast to MPEG-7, each type of multi-media data is described by a specific group of descriptors, and the type of material is determined by its content. The descriptors are derived using fuzzy methods and are characterized by their low storage requirements (23-72 bytes per image). Moreover, each descriptor combines more than one feature (i.e., color and texture), which classifies them as composite descriptors. The set of descriptors incorporated into the second thematic unit of the thesis can be described by the general term Compact Composite Descriptors.

In its entirety, the second thematic unit of the thesis contains descriptors for the following types of multi-media material:

- Category 1: Images/video with natural content

- Category 2: Images/video with artificially generated content

- Category 3: Images with medical content

For the description and retrieval of multi-media material with natural content, 4 descriptors were developed:

- CEDD - Color and Edge Directivity Descriptor

- C.CEDD - Compact Color and Edge Directivity Descriptor

- FCTH - Fuzzy Color and Texture Histogram

- C.FCTH - Compact Fuzzy Color and Texture Histogram

The CEDD includes texture information produced by the six-bin histogram of a fuzzy system that uses the five digital filters proposed by the MPEG-7 EHD. Additionally, for color information the CEDD uses a 24-bin color histogram produced by the 24-bin fuzzy-linking system. Overall, the final histogram has 6 × 24 = 144 regions.

The FCTH descriptor includes texture information produced by the eight-bin histogram of a fuzzy system that uses the high-frequency bands of the Haar wavelet transform. For color information, the descriptor uses a 24-bin color histogram produced by the 24-bin fuzzy-linking system. Overall, the final histogram includes 8 × 24 = 192 regions.

The method for producing the C.CEDD differs from the CEDD method only in the color unit. The C.CEDD uses a fuzzy ten-bin linking system instead of the fuzzy 24-bin linking system. Overall, the final histogram has only 6 × 10 = 60 regions. Compact CEDD is the smallest descriptor of the proposed set, requiring less than 23 bytes per image.

The method for producing C.FCTH differs from the FCTH method only in the color unit. Like its C.CEDD counterpart, this descriptor uses only a fuzzy ten-bin linking system instead of the fuzzy 24-bin linking system. Overall, the final histogram includes only 8 × 10 = 80 regions.
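Each of the four descriptors can be read as a texture-by-color grid flattened into one histogram (6 × 24, 8 × 24, 6 × 10 and 8 × 10 bins). A minimal sketch, assuming a texture-major bin layout and that each image block adds its fuzzy color memberships to the bins of its texture area; the helper names are hypothetical, and the fuzzy texture and color systems themselves are not reproduced.

```csharp
using System;
using System.Linq;

// Hypothetical composite (texture x color) histogram accumulation.
public static class CompositeHistogram
{
    // Bin index for texture area t and color bin c, assuming a texture-major layout:
    // all color bins of texture area 0, then those of area 1, and so on.
    public static int BinIndex(int textureBin, int colorBin, int colorBins)
        => textureBin * colorBins + colorBin;

    // Adds one image block: its texture area plus its fuzzy color memberships (one weight per color bin).
    // The histogram must have textureBins * colorMemberships.Length entries.
    public static void AddBlock(double[] hist, int textureBin, double[] colorMemberships)
    {
        for (int c = 0; c < colorMemberships.Length; c++)
            hist[BinIndex(textureBin, c, colorMemberships.Length)] += colorMemberships[c];
    }

    // Scale the histogram so that its bins sum to 1.
    public static void Normalize(double[] hist)
    {
        double sum = hist.Sum();
        if (sum <= 0) return;
        for (int i = 0; i < hist.Length; i++) hist[i] /= sum;
    }
}
```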

To restrict the length of the proposed descriptors, the normalized bin values are quantized to three bits per bin for binary representation.
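The storage figures quoted above follow from the three-bit quantization: 60 bins × 3 bits = 180 bits = 22.5 bytes for C.CEDD, and 192 × 3 / 8 = 72 bytes for FCTH. The sketch below uses uniform quantization levels as an assumption (the actual thresholds are not given in this summary) and packs the 3-bit values into bytes; the class name is hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical 3-bit quantization and bit packing of descriptor bins.
public static class DescriptorQuantizer
{
    // Map a normalized bin value in [0, 1] to one of 8 uniform levels (3 bits).
    public static byte Quantize(double value)
    {
        double clamped = Math.Max(0.0, Math.Min(1.0, value));
        return (byte)Math.Min((int)(clamped * 8), 7);
    }

    // Storage needed when every bin takes 3 bits, rounded up to whole bytes.
    public static int PackedBytes(int bins) => (bins * 3 + 7) / 8;

    // Pack the 3-bit levels into a byte array, least significant bits first.
    public static byte[] Pack(IReadOnlyList<byte> levels)
    {
        var packed = new byte[PackedBytes(levels.Count)];
        for (int i = 0; i < levels.Count; i++)
        {
            int bit = i * 3;
            int shift = bit % 8;
            int v = levels[i] & 0x7;
            packed[bit / 8] |= (byte)(v << shift);                     // low part (truncated to 8 bits)
            if (shift > 5) packed[bit / 8 + 1] |= (byte)(v >> (8 - shift)); // bits spilling into the next byte
        }
        return packed;
    }
}
```

For example, `PackedBytes(60)` rounds 22.5 up to 23 bytes and `PackedBytes(192)` gives 72, matching the 23-72 bytes per image range.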

Experiments conducted on several benchmarking image databases demonstrate the effectiveness of the proposed descriptors, which outperform the MPEG-7 descriptors as well as other state-of-the-art descriptors from the literature. These descriptors are described in detail in Chapter 5.

Chapter 6 describes the Spatial Color Distribution Descriptor (SpCD). This descriptor combines color and spatial color distribution information. Since it captures the layout information of color features, it can be used for image retrieval with hand-drawn sketch queries. In addition, descriptors of this structure are considered suitable for colored graphics, since such images contain a relatively small number of colors and fewer texture regions than natural color images. The descriptor uses a new fuzzy-linking system that maps the colors of the image to a custom 8-color palette.
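The SpCD fuzzy-linking system and its palette are not specified here. As a crisp stand-in only, the idea of color layout can be sketched by mapping each pixel to the nearest color of a hypothetical 8-color palette and recording, for each cell of a coarse grid, the fraction of its pixels assigned to each palette color. Palette, grid and names are all assumptions.

```csharp
using System;

// Hypothetical crisp color-layout sketch (not the SpCD fuzzy-linking system).
public static class ColorLayout
{
    // Hypothetical 8-color palette; the SpCD palette itself is not listed in the text.
    static readonly (int R, int G, int B)[] Palette =
    {
        (0, 0, 0), (255, 255, 255), (255, 0, 0), (0, 255, 0),
        (0, 0, 255), (255, 255, 0), (0, 255, 255), (255, 0, 255)
    };

    // Index of the palette color closest to (r, g, b) in squared RGB distance.
    public static int NearestPaletteIndex(int r, int g, int b)
    {
        int best = 0, bestDist = int.MaxValue;
        for (int i = 0; i < Palette.Length; i++)
        {
            int dr = r - Palette[i].R, dg = g - Palette[i].G, db = b - Palette[i].B;
            int d = dr * dr + dg * dg + db * db;
            if (d < bestDist) { bestDist = d; best = i; }
        }
        return best;
    }

    // For each cell of a grid x grid layout, the fraction of its pixels mapped to each palette color.
    public static double[,,] Layout((int R, int G, int B)[,] pixels, int grid)
    {
        int h = pixels.GetLength(0), w = pixels.GetLength(1);
        var layout = new double[grid, grid, Palette.Length];
        var cellCounts = new int[grid, grid];
        for (int y = 0; y < h; y++)
            for (int x = 0; x < w; x++)
            {
                int cy = y * grid / h, cx = x * grid / w;
                var p = pixels[y, x];
                layout[cy, cx, NearestPaletteIndex(p.R, p.G, p.B)]++;
                cellCounts[cy, cx]++;
            }
        for (int gy = 0; gy < grid; gy++)
            for (int gx = 0; gx < grid; gx++)
                if (cellCounts[gy, gx] > 0)
                    for (int k = 0; k < Palette.Length; k++)
                        layout[gy, gx, k] /= cellCounts[gy, gx];
        return layout;
    }
}
```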

The rapid advances in the field of radiology, the increased frequency of oncological diseases and the demand for regular medical checks have led to large databases of radiology images in every hospital or medical center. There is now an imperative need for an effective method of indexing and retrieving these images. Chapter 7 describes a new method for content-based retrieval of radiology images using the Brightness and Texture Directionality Histogram (BTDH). This descriptor combines brightness and texture characteristics, as well as the spatial distribution of these characteristics, in one compact 1D vector. The most important characteristic of the proposed descriptor is that its size adapts to the storage capabilities of the application using it.

The requirements of modern retrieval systems are not limited to good retrieval results but extend to the ability to return results quickly. The majority of Internet users would accept a reduction in the accuracy of the results in order to save search time. The third thematic unit describes how the proposed descriptors can be modified to achieve faster retrieval from databases. Test results indicate that the developed descriptors can search approximately 100,000 images per second, regardless of dimensions. Details on the method are given in Chapter 8.

Chapter 9 describes the early fusion of the two descriptors that describe visual multi-media material with natural content. Since this category includes more than one descriptor, the chapter analyzes how these descriptors can be combined to further improve retrieval results.

Each of the proposed descriptors is designed for images with a specific type of content. The descriptors developed for images with natural content cannot be used to retrieve grayscale medical images, and vice versa. For this reason, the performance of each descriptor was evaluated on image databases with homogeneous content suitable for that descriptor. However, most databases on the Internet are heterogeneous and include images from every category. The fourth thematic unit of this thesis describes how late fusion techniques can be used to combine all the proposed descriptors in order to achieve high retrieval scores in databases of this kind. Linear and non-linear methods adopted from the information retrieval field show that the combination of descriptors yields very satisfactory results on heterogeneous databases.
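Two classic score-combination rules from information retrieval are CombSUM, a plain sum of scores, and CombMNZ, which additionally multiplies by the number of runs that retrieved the document. They are shown here only as representative examples, assuming each descriptor produces normalized similarity scores; which rules the thesis actually uses is not stated in this summary, and the class name is hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical late fusion of several descriptor runs with CombSUM / CombMNZ.
public static class LateFusion
{
    // CombSUM: sum of a document's normalized similarity scores over all runs.
    // CombMNZ: the CombSUM score multiplied by the number of runs that retrieved the document.
    public static Dictionary<string, double> Fuse(
        IEnumerable<IReadOnlyDictionary<string, double>> runs, bool mnz)
    {
        var sum = new Dictionary<string, double>();
        var hits = new Dictionary<string, int>();
        foreach (var run in runs)
            foreach (var kv in run)
            {
                sum.TryGetValue(kv.Key, out double s);
                sum[kv.Key] = s + kv.Value;
                hits.TryGetValue(kv.Key, out int n);
                hits[kv.Key] = n + 1;
            }
        return sum.ToDictionary(kv => kv.Key, kv => mnz ? kv.Value * hits[kv.Key] : kv.Value);
    }
}
```

CombMNZ rewards documents that several descriptors agree on, which is one reason count-weighted rules are popular when combining heterogeneous evidence.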

In the same (fourth) thematic unit, a retrieval scenario over distributed image databases is considered. In this scenario, the user executes a search in multiple databases, and each database may use its own descriptor(s) for the images it contains. By again adopting methods from the information retrieval field and combining them with a method developed in this thesis, high retrieval scores can be achieved. Details on the fusion methods, as well as the retrieval methods for distributed image databases, are given in Chapter 10.

Finally, the fourth thematic unit is completed by a relevance feedback algorithm. The aim of the proposed algorithm is to readjust, or even alter, the initial retrieval results based on user preferences. During this process, the user selects from the retrieved results those images that are similar to his/her expected results. Information extracted from these images is then used to alter the descriptor of the query image. The method is described in Chapter 11.
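The thesis algorithm is not detailed in this summary. As a swapped-in, well-known technique that also alters the query descriptor from user-selected images, a Rocchio-style update with positive feedback only moves the query vector towards the mean descriptor of the selected images. The weights `alpha` and `beta` and their defaults are assumptions, and the class name is hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Rocchio-style positive relevance feedback (a stand-in, not the thesis algorithm).
public static class RelevanceFeedback
{
    // New query = alpha * query + beta * mean(relevant descriptors).
    // The result may need renormalizing if descriptors are expected to be normalized histograms.
    public static double[] UpdateQuery(
        double[] query, IReadOnlyList<double[]> relevant, double alpha = 1.0, double beta = 0.75)
    {
        var updated = new double[query.Length];
        for (int i = 0; i < query.Length; i++)
        {
            double mean = relevant.Count > 0 ? relevant.Average(r => r[i]) : 0.0;
            updated[i] = alpha * query[i] + beta * mean;
        }
        return updated;
    }
}
```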

The fifth part of the thesis describes the implementation of the four prior parts into free and open source software packages. During the course of the thesis, 3 software packages were developed:

- Software 1: Img(Rummager)

- Software 2: Img(Anaktisi)

- Software 3: LIRe

Img(Rummager) was used to demonstrate the results of the research carried out in this thesis. In addition to the developed descriptors, the software implements a large number of descriptors from the literature (including the MPEG-7 descriptors), so the application serves as an image retrieval platform for studying the behavior of a number of descriptors. The application can evaluate retrieval results using both the new image retrieval evaluation method and MAP and ANMRR. The application was programmed in C# and is freely available via the webpage of the Automatic Control, Systems and Robotics Laboratory, Department of Electrical and Computer Engineering, Democritus University of Thrace.
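MAP, one of the measures mentioned above, averages the per-query average precision. A minimal sketch of the standard definition, in which relevant items that are never retrieved contribute zero precision; the class name is hypothetical and this is not the Img(Rummager) code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Standard average precision and MAP (hypothetical helper, not Img(Rummager) code).
public static class RetrievalMetrics
{
    // Sum of precision@k at every rank k holding a relevant item, divided by the number of relevant items.
    public static double AveragePrecision(IReadOnlyList<string> ranking, ISet<string> relevant)
    {
        if (relevant.Count == 0) return 0.0;
        int hits = 0;
        double sum = 0;
        for (int k = 0; k < ranking.Count; k++)
            if (relevant.Contains(ranking[k]))
            {
                hits++;
                sum += (double)hits / (k + 1);
            }
        return sum / relevant.Count;
    }

    // MAP: the mean of the per-query average precisions.
    public static double MeanAveragePrecision(
        IEnumerable<(IReadOnlyList<string> Ranking, ISet<string> Relevant)> queries)
        => queries.Average(q => AveragePrecision(q.Ranking, q.Relevant));
}
```

For the ranking a, b, c, d with relevant set {a, c}, the precisions at the two hits are 1/1 and 2/3, so AP = (1 + 2/3) / 2 ≈ 0.833.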

Img(Anaktisi) was developed in collaboration with the Electrical Circuit Analysis Laboratory, Department of Electrical and Computer Engineering, Democritus University of Thrace. It is an Internet-based application that can execute image retrieval over a large number of images using the tools proposed in this thesis. The application is programmed in C#.

Moreover, the proposed descriptors were included in LIRe, a free and open source library. This library is programmed in Java and includes implementations of the most important descriptors used for image retrieval. It was developed in collaboration with the Distributed Multimedia Systems Research Group (Ass. Prof. M. Lux), Institute of Information Technology, Alpen-Adria University, Klagenfurt, Austria. Details on the developed software are given in Chapter 12.

The sixth thematic unit of the thesis presents some of the applications developed using the methods employed during the thesis research. First, an image encryption system was developed that adopts methods from the field of image retrieval. The proposed method employs cellular automata and image descriptors to ensure the secure transfer of images and video. Chapter 13 describes the method.

In collaboration with the "Electrical Circuit Analysis Laboratory", Department of Electrical and Computer Engineering, Democritus University of Thrace, an automated image annotation system was developed using support vector machines. Based on a combination of descriptors, the system annotates the image content with one or more words from a predetermined dictionary. Both the developed system and the details of the method are given in Chapter 14.

In addition, a system that combines all the descriptors from the second thematic unit, as well as the fusion methods of the fourth thematic unit, was developed to create automated video summaries. The method uses fuzzy classifiers to group the frames into a predetermined number of classes, with the distinctive property that each frame may belong to more than one class. Details are given in Chapter 15.

For the purposes of this thesis, an application was also developed that combines the proposed descriptors with high-level features. Specifically, it combines the visual content of 250,000 Wikipedia images with the tags that accompany the images and with the content of the articles in which the images appear. In essence, this is a multimodal fusion problem; it is described in Chapter 16.
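To make the multimodal fusion idea a little more concrete, here is a minimal sketch of score-based late fusion: each modality (visual descriptors, tags, article text) scores the images, the scores are min-max normalized per modality and then combined with a weighted sum (weighted CombSUM). The modality names, weights and the CombSUM rule are assumptions for illustration only; this is not necessarily the fusion method used in Chapter 16.

```python
from typing import Dict

Scores = Dict[str, float]  # image id -> retrieval score for one modality


def min_max_normalize(scores: Scores) -> Scores:
    """Rescale one modality's scores to [0, 1]; constant scores map to 0."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    span = hi - lo
    return {img: (s - lo) / span if span > 0 else 0.0 for img, s in scores.items()}


def weighted_combsum(modalities: Dict[str, Scores], weights: Dict[str, float]) -> Scores:
    """Weighted sum of normalized scores; an image absent from a modality gets 0 there."""
    fused: Scores = {}
    for name, scores in modalities.items():
        w = weights.get(name, 1.0)
        for img, s in min_max_normalize(scores).items():
            fused[img] = fused.get(img, 0.0) + w * s
    return fused


if __name__ == "__main__":
    # Toy scores for three hypothetical images (not data from the thesis)
    visual = {"img1": 0.9, "img2": 0.4, "img3": 0.1}
    tags = {"img1": 2.0, "img3": 5.0}
    text = {"img2": 1.0, "img3": 3.0}
    fused = weighted_combsum({"visual": visual, "tags": tags, "text": text},
                             {"visual": 0.6, "tags": 0.2, "text": 0.2})
    print(sorted(fused, key=fused.get, reverse=True))  # ['img1', 'img3', 'img2']
```

Min-max normalization is used because raw scores from different modalities live on different scales; without it, one modality would dominate the sum regardless of the weights.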

The MPEG-7 standard proposed a structure for describing audiovisual multimedia databases. Each database is described by an XML file that contains the information for each image in a standardized format. This structure allows applications to extend it by adding new fields. The first appendix of the thesis analyzes how the developed descriptors can be incorporated into the standardized MPEG-7 format.
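As a rough illustration of what such an extension might look like, the sketch below writes one image description with Python's standard ElementTree. The Mpeg7 / Description / MultimediaContent / Image / MediaLocator / VisualDescriptor nesting follows the usual MPEG-7 layout as far as I know, but the "CEDDType" descriptor type and its "Bins" child are hypothetical placeholders, not part of the standard. A real extension would need its own schema type derived from the standard's visual descriptor base type, and the output should be validated against the MPEG-7 schema before being relied on.

```python
import xml.etree.ElementTree as ET
from typing import Sequence

MPEG7_NS = "urn:mpeg:mpeg7:schema:2001"
XSI_NS = "http://www.w3.org/2001/XMLSchema-instance"
ET.register_namespace("", MPEG7_NS)   # serialize MPEG-7 as the default namespace
ET.register_namespace("xsi", XSI_NS)

XSI_TYPE = f"{{{XSI_NS}}}type"


def m7(tag: str) -> str:
    """Qualify a tag name with the MPEG-7 namespace."""
    return f"{{{MPEG7_NS}}}{tag}"


def describe_image(image_uri: str, descriptor_type: str, bins: Sequence[int]) -> ET.Element:
    """Build an MPEG-7-style description of one image carrying a custom descriptor."""
    root = ET.Element(m7("Mpeg7"))
    desc = ET.SubElement(root, m7("Description"), {XSI_TYPE: "ContentEntityType"})
    content = ET.SubElement(desc, m7("MultimediaContent"), {XSI_TYPE: "ImageType"})
    image = ET.SubElement(content, m7("Image"))
    locator = ET.SubElement(image, m7("MediaLocator"))
    ET.SubElement(locator, m7("MediaUri")).text = image_uri
    # Hypothetical extension: a custom descriptor type carrying the histogram bins
    vd = ET.SubElement(image, m7("VisualDescriptor"), {XSI_TYPE: descriptor_type})
    ET.SubElement(vd, m7("Bins")).text = " ".join(str(b) for b in bins)
    return root


if __name__ == "__main__":
    doc = describe_image("images/0001.jpg", "CEDDType", [0] * 144)
    print(ET.tostring(doc, encoding="unicode"))
```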

Saturday, November 27, 2010

Hessian Optimal Design for image retrieval

Ke Lu, Jidong Zhao and Yue Wu

Available online 19 November 2010.

Abstract

Recently there has been considerable interest in active learning from the perspective of optimal experimental design (OED). OED selects the most informative samples to minimize the covariance matrix of the parameters so that the expected prediction error of the parameters, as well as the model output, can be minimized. Most of the existing OED methods are based on either linear regression or Laplacian regularized least squares (LapRLS) models. Although LapRLS has shown better performance than linear regression, its solution is biased towards a constant and it lacks extrapolating power. In this paper, we propose a novel active learning algorithm called Hessian Optimal Design (HOD). HOD is based on the second-order Hessian energy for semi-supervised regression, which overcomes the drawbacks of Laplacian-based methods. Specifically, HOD selects those samples which minimize the parameter covariance matrix of the Hessian regularized regression model. Experimental results on content-based image retrieval demonstrate the effectiveness of our proposed approach.

Keywords: Active learning; Regularization; Image retrieval
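The core OED step described in the abstract (pick the samples that make the parameter covariance "small") can be sketched as a greedy loop. This sketch assumes the regularized covariance has the form (X_S^T X_S + lam1*R + lam2*I)^-1, as in Laplacian-regularized designs, and uses the trace of that matrix (A-optimality) as the size measure; both choices are assumptions. In HOD the regularizer R would come from the Hessian energy, which is not computed here: R is simply passed in, and the usage example uses an identity matrix as a placeholder.

```python
import numpy as np
from typing import List


def greedy_a_optimal(X: np.ndarray, R: np.ndarray, k: int,
                     lam1: float = 0.1, lam2: float = 1e-3) -> List[int]:
    """Greedily pick k rows of X that minimize trace((X_S^T X_S + lam1*R + lam2*I)^-1).

    X: (n, d) candidate feature vectors.
    R: (d, d) positive semi-definite regularizer (Laplacian- or Hessian-energy term
       in LapRLS/HOD-style designs; supplied by the caller in this sketch).
    """
    n, d = X.shape
    if k > n:
        raise ValueError("cannot select more samples than candidates")
    M = lam1 * R + lam2 * np.eye(d)  # information matrix before any sample is labeled
    selected: List[int] = []
    for _ in range(k):
        best_i, best_cost = -1, np.inf
        for i in range(n):
            if i in selected:
                continue
            x = X[i][:, None]  # column vector (d, 1)
            cost = np.trace(np.linalg.inv(M + x @ x.T))  # A-optimality criterion
            if cost < best_cost:
                best_i, best_cost = i, cost
        selected.append(best_i)
        x = X[best_i][:, None]
        M = M + x @ x.T  # the chosen sample is now treated as labeled
    return selected


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    features = rng.normal(size=(50, 8))  # toy image features
    regularizer = np.eye(8)              # placeholder for a real Hessian-energy term
    print(greedy_a_optimal(features, regularizer, k=5))
```

The greedy loop re-inverts a d x d matrix per candidate, which is fine for a sketch; a practical implementation would use rank-one (Sherman-Morrison) updates instead.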

Introduction to Content Based Image Retrieval Tutorial

Introduction to Content Based Image Retrieval by Bin Shen

Available at http://khup.com/view/18_keyword-image-vectorize/introduction-to-content-based-image-retrieval.html

Tuesday, November 23, 2010

20 Must-See TED Speeches for Computer Scientists

Article from http://www.mastersincomputerscience.com/20-must-see-ted-speeches-for-computer-scientists/

TED is a nonprofit organization that has dedicated itself to the concept that good ideas are worth spreading. To promote these great ideas, TED (which stands for Technology, Entertainment, Design) has a yearly conference in Long Beach, California. While the conference is too expensive for most people to attend, at $6000 per person, TED has used the Internet to share talks from the conference since 2006.

These are free of charge to the public and an amazing resource for professionals in many fields. Computer scientists will find these 20 TED speeches (not ranked in any particular order) informative, challenging, and stimulating. Maybe an idea worth spreading will help you make the connection you’ve been looking for in your own computer science research.

1. George Dyson at the birth of the computer

Have you ever heard the real stories about the dawn of computing? Sure, you have probably memorized important dates and can talk about things like vacuum tubes and punch cards, but do you really know what went on at the beginning? In this enlightening talk, computer historian George Dyson reveals fascinating and sometimes funny anecdotes about the beginning of computing.

2. Kwabena Boahen on a computer that works like the brain

Kwabena Boahen’s team at Stanford is working on computer technology that works less like a computer usually does, and more like the human brain does. He discusses the inefficiencies associated with traditional computing and ways that they might be overcome using reverse engineering of the human nervous system.

3. Jeff Han demos his breakthrough touchscreen

Jeff Han’s incredible speech from 2006 shows the future of touch-screen interfaces. His scalable, multi-touch, pressure-sensitive interface allows people to use the computer without the barriers of having to point and click all the time.

4. Paul Debevec animates a photo-real digital face

Paul Debevec explains the process behind his animation of Digital Emily, a hyper-realistic character. Digital Emily is based on a real person named Emily, and created using an advanced 360-degree camera setup.

5. http://www.ted.com/talks/stephen_wolfram_computing_a_theory_of_everything.html

In this mind-boggling speech, Stephen Wolfram, a world-renowned leader in scientific computing, discusses his life’s mission. He wants to make all of the knowledge in the world computational. His new search engine, Wolfram|Alpha, is designed to take all of the available information on the web and make instant computations of that information accessible to the world.

6. Dennis Hong: My seven species of robot

As artificial intelligence technology continues to evolve, the quest continues to create robots that are truly useful on a scientific and on an ordinary, day-to-day level. Dennis Hong’s RoMeLa lab at Virginia Tech presents seven distinctly different robots in this talk. Make sure you watch all the way to the end to discover his secrets of creativity.

7. http://www.ted.com/talks/gary_flake_is_pivot_a_turning_point_for_web_exploration.html

Gary Flake believes that the whole of all data we have is greater than the sum of its parts. He and the staff at Microsoft Live Labs have created a fascinating new tool called Pivot that enables people to browse the web not by going from page to page, but by looking at large patterns all at once.

Monday, November 15, 2010

Relative status of journal and conference publications in computer science

Though computer scientists agree that conference publications enjoy greater status in computer science than in other disciplines, there is little quantitative evidence to support this view. The importance of journal publication in academic promotion makes it a highly personal issue, since focusing exclusively on journal papers misses many significant papers published by CS conferences.

Here, we aim to quantify the relative importance of CS journal and conference papers, showing that CS papers in leading conferences match the impact of papers in mid-ranking journals and surpass the impact of papers in journals in the bottom half of the Thomson Reuters rankings (http://www.isiknowledge.com) for impact measured in terms of citations in Google Scholar. We also show that poor correlation between this measure and conference acceptance rates indicates conference publication is an inefficient market where venues equally challenging in terms of rejection rates offer quite different returns in terms of citations.

How to measure the quality of academic research and performance of particular researchers has always involved debate. Many CS researchers feel that performance assessment is an exercise in futility, in part because academic research cannot be boiled down to a set of simple performance metrics, and any attempt to introduce them would expose the entire research enterprise to manipulation and gaming. On the other hand, many researchers want some reasonable way to evaluate academic performance, arguing that even an imperfect system sheds light on research quality, helping funding agencies and tenure committees make more informed decisions.

One long-standing way of evaluating academic performance is through publication output. Best practice for academics is to write key research contributions as scholarly articles for submission to relevant journals and conferences; the peer-review model has stood the test of time in determining the quality of accepted articles. However, today's culture of academic publication accommodates a range of publication opportunities yielding a continuum of quality, with a significant gap between the lower and upper reaches of the continuum; for example, journal papers are routinely viewed as superior to conference papers, which are generally considered superior to papers at workshops and local symposia. Several techniques are used for evaluating publications and publication outlets, mostly targeting journals. For example, Thomson Reuters (the Institute for Scientific Information) and other such organizations record and assess the number of citations accumulated by leading journals (and some high-ranking conferences) in the ISI Web of Knowledge (http://www.isiknowledge.com) to compute the impact factor of a journal as a measure of its ability to attract citations. Less-reliable indicators of publication quality are also available for judging conference quality; for example, a conference's rejection rate is often cited as a quality indicator on the grounds that a high rejection rate means a more selective review process able to generate higher-quality papers. However, as the devil is in the details, the details in this case vary among academic disciplines and subdisciplines.
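For readers who have never computed one, the standard two-year journal impact factor mentioned above is just a ratio: citations received in year Y by items the journal published in years Y-1 and Y-2, divided by the number of citable items it published in those two years. The function name and the toy numbers below are illustrative.

```python
def impact_factor(citations_in_year: int, citable_items_prev_two_years: int) -> float:
    """Two-year impact factor for year Y.

    citations_in_year: citations received during year Y by items the journal
        published in years Y-1 and Y-2.
    citable_items_prev_two_years: number of citable items (articles, reviews)
        the journal published in Y-1 and Y-2.
    """
    if citable_items_prev_two_years <= 0:
        raise ValueError("need at least one citable item")
    return citations_in_year / citable_items_prev_two_years


if __name__ == "__main__":
    # Toy numbers: 300 citations in 2010 to the 120 items published in 2008-2009
    print(impact_factor(300, 120))  # 2.5
```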

Here, we examine the issue of publication quality from a CS/engineering perspective, describing how related publication practices differ from those of other disciplines, in that CS/engineering research is mainly published in conferences rather than in journals. This culture presents an important challenge when evaluating CS research because traditional impact metrics are better suited to evaluating journal rather than conference publications.

In order to legitimize the role of conference papers to the wider scientific community, we offer an impact measure based on an analysis of Google Scholar citation data suited to CS conferences. We validate this new measure with a large-scale experiment covering 8,764 conference and journal papers to demonstrate a strong correlation between traditional journal impact and our new citation score. The results highlight how leading conferences compare favorably to mid-ranking journals, surpassing the impact of journals in the bottom half of the traditional ISI Web of Knowledge ranking. We also discuss a number of interesting anomalies in the CS conference circuit, highlighting how conferences with similar rejection rates (the traditional way of evaluating conferences) can attract quite different citation counts. We also note interesting geographical distinctions in this regard, particularly with respect to European and U.S. conferences.
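The excerpt does not define the authors' citation score or say which correlation coefficient they used, so the following is only a generic sketch of how one could check rank agreement between two venue-level measures (say, a journal impact ranking and a citation-based score). It computes Spearman's rho as the Pearson correlation of the ranks, giving tied values their average rank. The inputs in the usage example are toy values, not data from the paper.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for t in range(i, j + 1):
            ranks[order[t]] = avg
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        raise ValueError("correlation undefined for constant input")
    return cov / (vx * vy) ** 0.5


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    impact = [1.2, 3.4, 0.8, 2.0]   # toy journal impact values
    score = [10, 25, 4, 30]         # toy citation scores for the same venues
    print(spearman(impact, score))  # 0.8
```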

@article{Freyne:2010:RSJ:1839676.1839701,
author = {Freyne, Jill and Coyle, Lorcan and Smyth, Barry and Cunningham, Padraig},
title = {Relative status of journal and conference publications in computer science},
journal = {Commun. ACM},
volume = {53},
issue = {11},
month = {November},
year = {2010},
issn = {0001-0782},
pages = {124--132},
numpages = {9},
url = {http://doi.acm.org/10.1145/1839676.1839701},
doi = {http://doi.acm.org/10.1145/1839676.1839701},
acmid = {1839701},
publisher = {ACM},
address = {New York, NY, USA},
}

Sunday, November 14, 2010

Elsevier Releases Image Search; New SciVerse ScienceDirect Feature

Elsevier, a leading publisher of scientific, technical and medical (STM) information, today announced the availability of Image Search, a new SciVerse ScienceDirect (http://www.sciencedirect.com) feature that enables users to quickly and efficiently find images and figures relevant to their specific research objectives.

The new feature allows researchers to search across more than 15 million images contained within SciVerse ScienceDirect. Results include tables, photos, figures, graphs and videos from trusted peer-reviewed full text sources. Saving researchers significant time, Image Search ensures increased efficiency in finding the relevant visuals.

Search results can be refined by image type and contain links to the location within the original source article, allowing researchers to verify each image in context. Researchers can take advantage of Image Search to learn new concepts, prepare manuscripts or visually convey ideas in presentations and lectures. The new feature is available to SciVerse ScienceDirect subscribers at no additional cost.

“By allowing researchers to delve immediately into the images on SciVerse ScienceDirect, the new feature saves researchers valuable time and offers immediate access to a vast database of trusted visual content,” said Rafael Sidi, Vice President of Product Management for Elsevier’s SciVerse ScienceDirect. “Developed in response to user feedback, Image Search represents another example of the steps Elsevier is taking to speed scientific search and discovery by improving researcher workflow.”

Saturday, November 13, 2010

All-seeing eye for CCTV surveillance

Reported by Duncan Graham-Rowe 09 November 2010 in New Scientist

In some cities, CCTV is a victim of its own success: the number of cameras means there is not enough time to review the tapes. That could change, with software that can automatically summarise the footage.

"We take different events happening at different times and show them together," says Shmuel Peleg of BriefCam based in Neve Ilan, Israel, a company he co-founded based on his work at the Hebrew University of Jerusalem. "On average an hour is summarised down to a minute."

The software relies on the fact that most surveillance cameras are stationary and so record a static background. This makes it possible for the software to detect and extract sections of the video when anything moving enters the scene. All events occurring in a given time period, say an hour, are then superimposed to create a short video that shows all of the action at once.

If something of interest is spotted while watching the summary, the operator can click on it to jump straight to the relevant point in the original CCTV footage. BriefCam's software has been shortlisted as a finalist for the 2010 Global Security Challenge prize at its conference in London this week.
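The background-subtraction idea behind this kind of summary can be sketched in a few lines of NumPy. This is a simplification and not BriefCam's method: it estimates the static background as the per-pixel temporal median, marks pixels that differ from it by more than a threshold (an assumed value) as moving, and pastes all moving pixels into a single composite image, whereas the real product builds a short video and rearranges events in time.

```python
import numpy as np


def estimate_background(frames: np.ndarray) -> np.ndarray:
    """Per-pixel temporal median of (T, H, W) grayscale frames.

    Works because a stationary camera sees the same background most of the time.
    """
    return np.median(frames, axis=0)


def foreground_masks(frames: np.ndarray, background: np.ndarray,
                     threshold: float = 25.0) -> np.ndarray:
    """Boolean (T, H, W) mask of pixels that differ noticeably from the background."""
    return np.abs(frames.astype(float) - background) > threshold


def superimpose(frames: np.ndarray, background: np.ndarray, masks: np.ndarray) -> np.ndarray:
    """Paste the moving pixels of every frame onto one background image.

    Where objects from different times overlap, later frames overwrite earlier ones.
    """
    summary = background.astype(float).copy()
    for frame, mask in zip(frames, masks):
        summary[mask] = frame[mask]
    return summary


if __name__ == "__main__":
    # Toy footage: 10 empty 8x8 frames, one object at t=2 and another at t=7
    frames = np.zeros((10, 8, 8))
    frames[2, 1, 1] = 200.0
    frames[7, 5, 5] = 150.0
    bg = estimate_background(frames)
    summary = superimpose(frames, bg, foreground_masks(frames, bg))
    print(summary[1, 1], summary[5, 5])  # both events appear in one image
```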

Thursday, November 11, 2010

CAIP2011

CAIP2011 is the fourteenth in the CAIP series of biennial international conferences devoted to all aspects of Computer Vision, Image Analysis and Processing, Pattern Recognition and related fields. CAIP2011 will be held on August 29-31, 2011, in Seville (Spain), hosted by Seville University. The most recent CAIP conferences were held in Groningen (2003), Paris (2005), Vienna (2007) and Münster (2009). The scientific program of the conference will consist of several keynote addresses and high-quality papers selected by the international program committee and presented in a single track. Poster presentations will allow expert discussions of specialized research topics.

The scope of CAIP'11 includes, but is not limited to, the following areas:

- 3D Vision

- 3D TV

- Biometrics

- Color and texture

- Document analysis

- Graph-based Methods

- Image and video indexing and database retrieval

- Image and video processing

- Image-based modeling

- Kernel methods

- Medical imaging

- Mobile multimedia

- Model-based vision approaches

- Motion Analysis

- Non-photorealistic animation and modeling

- Object recognition

- Performance evaluation

- Segmentation and grouping

- Shape representation and analysis

- Structural pattern recognition

- Tracking

- Applications

Publication:

The conference proceedings will be published in the Springer LNCS series.

A special issue with selected papers in Pattern Recognition will be published in Pattern Recognition Letters.

A special issue with selected papers in Analysis of Images will be published in the Journal of Mathematical Imaging and Vision.

Monday, November 8, 2010

MediaEval Benchmarking Initiative

MediaEval is a benchmarking initiative dedicated to evaluating new algorithms for multimedia access and retrieval. MediaEval focuses on speech, language and contextual aspects of video (geographical and social context). Typical tasks include predicting tags for video, both user-assigned tags (Tagging Task) and geo-tags (Placing Task). We are daring enough to tackle "subjective" aspects of video (Affect Task).

How can I get involved?

MediaEval is an open initiative, meaning that any interested research group is free to sign up and participate. Signup for MediaEval 2011 will open in early Spring 2011 on this website. Groups sign up for one or more tasks; they then receive task definitions, data sets and supporting resources, which they use to develop their algorithms. At the end of the summer, groups submit their results, and in the fall they attend the MediaEval workshop. More information on MediaEval is available on the FAQ page (see also Why Participate?).

Which tasks will be offered in 2011?

The task offering in 2011 will be finalized on the basis of participant interest, assessed, as last year, via a survey. At this time, we anticipate that we will run a Tagging Task and Placing Task (see MediaEval 2010 for descriptions of the form these tasks took in 2010). We also anticipate offering an additional task involving ad hoc video retrieval and one involving violence detection. Please watch this page for additional information to appear at the beginning of 2011 or contact the organizers for information on the current status of plans.

Beyond plain sight?

We are interested in pushing video retrieval research beyond the pictorial content of video. In other words, we want to get past what we see in "plain sight" when watching a video and instead also analyze video content in terms of its overall subject matter (what it is actually about) and the effect that it has on viewers. This goal is consistent with our emphasis on speech, language and context features (geographical and social context).

There's a MediaEval trailer?

Yes. In the MediaEval 2010 Affect Task, we analyzed a travelogue series created by Bill Bowles, filmmaker and traveling video blogger. We were looking for elements that could be used to automatically predict whether, overall, a general audience would find a particular episode engaging or boring. Bill attended the MediaEval 2010 workshop in Pisa, listened to our findings and created a travelogue episode for us. In this video, Bill tells the story of MediaEval in his own words...and makes conscious use of some of the predictors we found to correlate with viewer engagement.

Wednesday, November 3, 2010

Facial recognition security to keep handset data safe

Reported by Stewart Mitchell in PC Pro. Article from http://gsirak.ee.duth.gr/index.php/archives/163

Mobile phone security could come face-to-face with science fiction following a demonstration of biometric technology by scientists at the University of Manchester.

Mobile phones carry an ever-increasing level of personal data, but PIN-code log-ins can prove weak – if even applied – leaving those details vulnerable if a handset is stolen.

Facial recognition software could change that picture drastically.

“The idea is to recognise you as the user, and it does that by first taking a video of you, so it has your voice and lots of images for comparison,” said Phil Tresadern, lead researcher on the project.

Before letting anyone access the handset, the software examines an image of the person holding it, cross-referencing 22 facial landmarks with those of the owner.

“Existing mobile face trackers give only an approximate position and scale of the face,” said Tresadern. “Our model runs in real time and accurately tracks a number of landmarks on and around the face such as the eyes, nose, mouth and jaw line.”

The scientists claim their method is unrivalled for speed and accuracy and works on standard smartphones with front-facing cameras.

Facial recognition technology is nothing new, but squeezing something so computationally complicated onto a smartphone is quite an achievement.

“We have a demo that we have shown to potential partners that is running on Linux on a Nokia N900,” said Tresadern, adding that his team had to streamline the software to make it run on a mobile phone.

“We had to change some of the floating point calculations to fixed points with whole numbers to make it more efficient,” he said. “But other than that it ported across quite easily.”
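Replacing floating-point arithmetic with fixed-point arithmetic, as Tresadern describes, usually means storing every real number as an integer scaled by a power of two. The article does not say which format the Manchester team used; the sketch below assumes Q16.16 (16 fractional bits) purely for illustration.

```python
FRAC_BITS = 16            # Q16.16: 16 fractional bits (assumed format)
ONE = 1 << FRAC_BITS      # fixed-point representation of 1.0


def to_fixed(x: float) -> int:
    """Convert a float to the nearest Q16.16 integer."""
    return round(x * ONE)


def to_float(q: int) -> float:
    return q / ONE


def fixed_add(a: int, b: int) -> int:
    return a + b          # same scale, so plain integer addition works


def fixed_mul(a: int, b: int) -> int:
    """Multiply two Q16.16 values.

    The raw product carries 32 fractional bits, so shift right by 16 to rescale
    (Python's >> rounds toward minus infinity). On a phone this intermediate
    would need a 64-bit integer; Python ints never overflow.
    """
    return (a * b) >> FRAC_BITS


if __name__ == "__main__":
    a, b = to_fixed(1.5), to_fixed(2.25)
    print(to_float(fixed_mul(a, b)))  # 3.375
    print(to_float(fixed_add(a, b)))  # 3.75
```

The appeal on 2010-era phones was that many mobile CPUs had slow or no floating-point units, while integer multiplies and shifts are cheap.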

The Manchester team said the technology has already attracted interest from “someone interested in putting it into the operating system for Nokia” and that the software could be licensed by app developers for any mobile device.

Read more: Facial recognition security to keep handset data safe | Security | News | PC Pro http://www.pcpro.co.uk/news/security/362287/facial-recognition-security-to-keep-handset-data-safe

Tuesday, November 2, 2010

The IASTED International Conference on Signal and Image Processing and Applications ~SIPA 2011~

http://www.iasted.org/conferences/cfp-738.html

CONFERENCE CHAIR

Prof. Ioannis Andreadis

Democritus University of Thrace, Greece

LOCATION

Situated in the warm and sunny Mediterranean, Crete is the largest of the Greek islands. It is renowned in myth and rich in a history that spans thousands of years. The centre of the ancient Minoan civilisation, Crete is the backdrop to the dramatic legend of the Minotaur. Crete's history is intertwined with struggles for regional dominance; over centuries, Crete has been ruled by different peoples, including the Venetians, the Ottoman Turks, the Romans, and the Byzantines, all of whom have left their mark on the island.

Today, history and myth blend seamlessly with Crete's natural beauty and lively culture. Take in the sharp mountain ranges, interrupted by steep ravines and divided by fields of olive trees, all below a splendid sky. Spend a day exploring the wild cypress forests and keep an eye out for the kri-kri, the Cretan wild goat. A trip to the stunning White Mountains and the Samariá Gorge is a must, as are the many ruins and historic landmarks throughout the island. Unwind with a sunset stroll on the beach followed by a hearty meal at a local tavern, or an evening of vibrant city nightlife.

PURPOSE

The IASTED International Conference on Signal and Image Processing and Applications (SIPA 2011) will be an international forum for researchers and practitioners interested in the advances in and applications of signal and image processing. It is an opportunity to present and observe the latest research, results, and ideas in these areas. SIPA 2011 aims to strengthen relationships between companies, research laboratories, and universities. All papers submitted to this conference will be evaluated double-blind by at least two reviewers. Acceptance will be based primarily on originality and contribution.

Monday, November 1, 2010

Orasis (ver. 1.1)

Orasis Image Processing Software (ver. 1.1) is now freely available for download at:

http://sites.google.com/site/vonikakis/software

You can improve your photos easily, with just a few mouse clicks.

Orasis is an experimental, biologically-inspired, image enhancement software, which employs the characteristics of the center-surround cells of the Human Visual System.

Often, the image captured by a camera and the image our eyes see are dramatically different, especially when there are shadows or highlights in the same scene. In these cases our eyes can distinguish many details in the shadows or the highlights, while the image captured by the camera loses visual information in these regions.

Orasis attempts to bridge the gap between "what you see" and "what the camera outputs". It enhances the shadow or highlight regions of an image while keeping all the correctly exposed regions intact. The final result is much closer to the human perception of the scene than the original captured image, revealing visual information that would otherwise not be available to the human observer. In addition, Orasis can correct low local contrast, color balance and noise.
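To give a flavor of how a center-surround model can lift shadows, here is a deliberately simple sketch. It is not the Orasis algorithm: it estimates each pixel's "surround" as the local mean over a square window (computed with an integral image) and applies a Naka-Rushton-style adaptation, out = x(1 + s) / (x + s), so pixels in dark surroundings are brightened more than pixels in bright ones. The window radius and the formula are assumptions, and the sketch handles only shadows, not highlights or color.

```python
import numpy as np


def box_mean(img: np.ndarray, radius: int) -> np.ndarray:
    """Local mean over a (2r+1)x(2r+1) window, using an integral image (edge-padded)."""
    k = 2 * radius + 1
    padded = np.pad(img.astype(float), radius, mode="edge")
    # Leading row/column of zeros so integral[i, j] = sum of padded[:i, :j]
    integral = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    window_sum = (integral[k:, k:] - integral[:-k, k:]
                  - integral[k:, :-k] + integral[:-k, :-k])
    return window_sum / (k * k)


def center_surround_lift(img: np.ndarray, radius: int = 15) -> np.ndarray:
    """Brighten pixels according to their surround (img is grayscale in [0, 1]).

    out = x * (1 + s) / (x + s) stays within [0, 1] and, apart from the tiny
    epsilon, never darkens a pixel; the boost is largest where the surround s is dark.
    """
    surround = box_mean(img, radius)
    return img * (1.0 + surround) / (img + surround + 1e-6)
```

The same 0.3-gray pixel comes out noticeably brighter when surrounded by shadow than when surrounded by bright areas, which is the basic "local adaptation" behavior that center-surround models aim for.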

The new version includes:

· JPEG compression support

· EXIF data support

· Keyboard shortcuts

· Decorrelation between brightness and local contrast enhancement

· Automatic Color Saturation

· Automatic check for Updates

· Improved memory management

· Improved Noise reduction algorithms

· Improved Automatic selection of parameters

· Improved Color Correction algorithm

· Improved Independent RGB mode

· Improved Interface

(Greek Version)

The new version of the Orasis Image Processing software (ver. 1.1) is now freely available for download at:

http://sites.google.com/site/vonikakis/software

You can now improve your photos with just a few clicks.

Orasis is an experimental, biologically inspired image processing software, which uses the characteristics of the center-surround antagonistic cells of the Human Visual System.

Often, the image recorded by the camera and the image in our eyes differ dramatically, especially when the scene we photograph contains shadows or strong light sources. In these cases, our eyes distinguish many more details within the shadows and the strong highlights. In contrast, the image the camera gives us contains far fewer details, with extensive dark or very bright regions.

Orasis tries to bridge the gap between "what we see" and "what the camera gives us". It enhances the shadowed or very bright regions, while leaving the correctly exposed regions of the image intact. The final result is much closer to what a person perceives when observing the scene, revealing details that would otherwise not be perceptible. In addition, Orasis improves the color balance, the low local contrast and the noise of photographs.

Friday, October 29, 2010

Unlogo - The Corporate Identity Media Filter

Unlogo is a web service, FFMPEG plugin, and AfterEffects plugin that eliminates logos and other corporate signage from videos. On a practical level, it takes back your personal media from the corporations and advertisers. On a technical level, it is a really cool combination of some brand new OpenCV and FFMPEG functionality. On a poetic level, it is a tool for focusing on what is important in the record of your life rather than the ubiquitous messages that advertisers want you to focus on.

In short, Unlogo gives people the opportunity to opt out of having corporate messages permanently imprinted into the photographic record of their lives.

It's simple: you upload a video, and after your video is processed, you get an email with a link to the unlogofied version (example: http://vimeo.com/14531292). You can choose to have the logos simply blocked out with a solid color or replaced with other images, such as the disembodied head of the CEO of the company. This scheme is a bit ridiculous (which is kind of my style), but I like it because it literalizes the intrusion into the record of your life that these logos represent.
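The two output options described above (solid-color block or replacement image) are easy to sketch once the logo regions are known. The detection step, which the post says relies on OpenCV, is not reproduced here: the bounding boxes are assumed to come from some hypothetical detector as (x, y, width, height) tuples, and frames are plain NumPy arrays of shape (H, W, 3).

```python
import numpy as np
from typing import Iterable, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) from a hypothetical logo detector


def block_out(frame: np.ndarray, boxes: Iterable[Box],
              color: Tuple[int, int, int] = (0, 0, 0)) -> np.ndarray:
    """Return a copy of an (H, W, 3) frame with each box filled by a solid color."""
    out = frame.copy()
    h, w = out.shape[:2]
    for x, y, bw, bh in boxes:
        # Clip the box to the frame so detections near the border are safe
        x0, y0 = max(0, x), max(0, y)
        x1, y1 = min(w, x + bw), min(h, y + bh)
        if x0 < x1 and y0 < y1:
            out[y0:y1, x0:x1] = color
    return out


def replace_with(frame: np.ndarray, box: Box, patch: np.ndarray) -> np.ndarray:
    """Paste `patch` over a box lying fully inside the frame, resized by nearest neighbour."""
    x, y, bw, bh = box
    rows = np.arange(bh) * patch.shape[0] // bh
    cols = np.arange(bw) * patch.shape[1] // bw
    out = frame.copy()
    out[y:y + bh, x:x + bw] = patch[rows][:, cols]
    return out
```

In a video pipeline these functions would run per decoded frame, with the boxes tracked from frame to frame.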

What is the money for?

The majority of the money will allow me to work on Unlogo for another 2 months. This is enough time to get the filter out of training mode and into active recognition mode.

Some will go towards paying off some of the debt from the money that I have already spent on programming help and server costs.

Some of the money (probably $1k-$1.5k) will be used to pay the consultants and additional programmers who have been helping to get the filter finished and running smoothly.

Some of the money ($300-$400) will go to the hosting bills. The CPU power to run this filter publicly isn't cheap! I have 1 Amazon EC2 server where the filtering/encoding is done, an S3 bucket where the videos are stored, and a 3rd server to host the front-end.

http://www.kickstarter.com/projects/816924031/unlogo-the-corporate-media-filter-0

Friday, October 22, 2010

Best Paper Award–2nd in a Week!!!!

My paper with Yiannis Boutalis and Avi Arampatzis

“ACCELERATING IMAGE RETRIEVAL USING BINARY HAAR WAVELET TRANSFORM ON THE COLOR AND EDGE DIRECTIVITY DESCRIPTOR"

was awarded BEST PAPER* at “The Fifth International Multi-Conference on Computing in the Global Information Technology (ICCGI 2010)”.

Full Citation:

S. A. Chatzichristofis, Y.S. Boutalis and Avi Arampatzis, “ACCELERATING IMAGE RETRIEVAL USING BINARY HAAR WAVELET TRANSFORM ON THE COLOR AND EDGE DIRECTIVITY DESCRIPTOR”, «The Fifth International Multi-Conference on Computing in the Global Information Technology (ICCGI 2010)», Proceedings: IEEE Computer Society, pp. 41-47, September 20 to 25, 2010, Valencia, Spain

Abstract:

In this paper, a new acceleration technique for content-based image retrieval is proposed, suitable for the Color and Edge Directivity Descriptor (CEDD). To date, the experimental results presented in the literature have shown that the CEDD achieves high retrieval rates on benchmark image databases. Although its storage requirements are minimal, only 54 bytes per image, the time required for the retrieval procedure may be too long in practice when searching large databases. The proposed technique uses the Binary Haar Wavelet Transform to extract from the CEDD a smaller and more efficient descriptor, less than 2 bytes per image, speeding up retrieval from large image databases. This descriptor describes the CEDD, but not necessarily the image from which it is extracted. The effectiveness of the proposed method is demonstrated through experiments performed on a well-known benchmark database.

*9 papers were awarded
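The 54-byte figure comes from the CEDD layout: 144 histogram bins quantized to 3 bits each, 144 × 3 = 432 bits = 54 bytes. The sketch below is not the paper's Binary Haar Wavelet Transform; it is a simplified stand-in that shows the general idea of squeezing such a histogram into a code under 2 bytes. It keeps only the Haar low-pass (pairwise average) coefficients for four levels (144 → 72 → 36 → 18 → 9), binarizes the 9 remaining values against their mean to get a 9-bit code, and compares codes with a Hamming distance.

```python
from typing import List, Sequence

CEDD_BINS = 144  # 144 bins x 3 bits each = 432 bits = 54 bytes


def haar_approximation(vec: Sequence[float], levels: int) -> List[float]:
    """Keep only the Haar low-pass (pairwise average) coefficients, `levels` times."""
    v = [float(x) for x in vec]
    for _ in range(levels):
        if len(v) % 2:
            raise ValueError("length must stay even at every level")
        v = [(v[i] + v[i + 1]) / 2 for i in range(0, len(v), 2)]
    return v


def compact_code(cedd: Sequence[int], levels: int = 4) -> int:
    """Binarize the 9 coarse coefficients (144 / 2**4) against their mean: a 9-bit code."""
    if len(cedd) != CEDD_BINS:
        raise ValueError("expected a 144-bin CEDD histogram")
    approx = haar_approximation(cedd, levels)
    mean = sum(approx) / len(approx)
    code = 0
    for bit, a in enumerate(approx):
        if a > mean:
            code |= 1 << bit
    return code


def hamming(a: int, b: int) -> int:
    """Number of differing bits; a cheap first-pass distance between codes."""
    return bin(a ^ b).count("1")
```

A plausible way to use such a code is as a pre-filter: compare the query's code against every database code with `hamming`, keep only the closest candidates, and run the full CEDD comparison on that short list.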

Saturday, October 16, 2010

Best Paper Award

My paper with Vicky Kalogeiton, Dim Papadopoulos and Yiannis Boutalis

“A NOVEL VIDEO SUMMARIZATION METHOD BASED ON COMPACT COMPOSITE DESCRIPTORS AND FUZZY CLASSIFIER"

was awarded the BEST PAPER* award at the “1st International Conference for Undergraduate and Postgraduate Students in Computer Engineering, Informatics, related Technologies and Applications”, October 14 to 15, 2010, Patra, Greece

Full Citation:

V. S. Kalogeiton, D. P. Papadopoulos, S. A. Chatzichristofis and Y. S. Boutalis, “A NOVEL VIDEO SUMMARIZATION METHOD BASED ON COMPACT COMPOSITE DESCRIPTORS AND FUZZY CLASSIFIER”, «1st International Conference for Undergraduate and Postgraduate Students in Computer Engineering, Informatics, related Technologies and Applications (EUREKA 2010)», October 14 to 15, 2010, Patra, Greece.

Abstract:

In this paper, a novel method to generate video summaries is proposed, intended mainly for online videos. The novelty of this approach lies in the fact that the authors reformulate the video summarization problem as a single-query image retrieval problem. This approach uses the recently proposed Compact Composite Descriptors (CCDs) and a fuzzy classifier. In particular, all the video frames are initially sorted according to their distance from an artificially generated, video-dependent image. The ranking list is then classified into a preset number of clusters using the Gustafson-Kessel fuzzy classifier. The video abstract is obtained by extracting a representative key frame from every cluster. A significant characteristic of the proposed method is its ability to assign each video frame to one or more clusters. Experimental results are presented to indicate the effectiveness of the proposed approach.
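The pipeline in the abstract (rank all frames against one reference image, fuzzy-cluster the ranking list, pick one key frame per cluster) can be sketched roughly as below. Several pieces are assumptions, not the paper's choices: the "artificial, video-dependent image" is taken to be the mean descriptor of all frames, distances are Euclidean, and plain fuzzy c-means on the ranked distances replaces the Gustafson-Kessel classifier. Frames are given as already-extracted descriptor vectors; all function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def reference_descriptor(frames: List[Sequence[float]]) -> List[float]:
    """Hypothetical 'artificial, video-dependent image': the mean descriptor of all frames."""
    n = len(frames)
    return [sum(col) / n for col in zip(*frames)]


def fuzzy_cmeans_1d(values: List[float], c: int, m: float = 2.0,
                    iters: int = 100) -> Tuple[List[float], List[List[float]]]:
    """Plain fuzzy c-means on scalars, used here as a stand-in for Gustafson-Kessel."""
    s = sorted(values)
    centers = [s[int((k + 0.5) * len(s) / c)] for k in range(c)]  # quantile initialisation
    u: List[List[float]] = []
    for _ in range(iters):
        u = []
        for x in values:
            d = [abs(x - ck) for ck in centers]
            if 0.0 in d:  # point lies exactly on a centre: crisp membership
                row = [1.0 if dk == 0.0 else 0.0 for dk in d]
                total = sum(row)
                row = [r / total for r in row]
            else:
                row = [1.0 / sum((dk / dj) ** (2 / (m - 1)) for dj in d) for dk in d]
            u.append(row)
        new_centers = []
        for k in range(c):
            w = [row[k] ** m for row in u]
            total = sum(w)
            new_centers.append(sum(wi * x for wi, x in zip(w, values)) / total
                               if total > 0 else centers[k])
        centers = new_centers
    return centers, u


def summarize(frames: List[Sequence[float]], num_clusters: int) -> List[int]:
    """Return indices of key frames: the highest-membership frame of each fuzzy cluster."""
    ref = reference_descriptor(frames)
    dists = [euclidean(f, ref) for f in frames]
    order = sorted(range(len(frames)), key=lambda i: dists[i])  # the ranking list
    _, u = fuzzy_cmeans_1d([dists[i] for i in order], num_clusters)
    keyframes = {order[max(range(len(order)), key=lambda r: u[r][k])]
                 for k in range(num_clusters)}
    return sorted(keyframes)
```

The membership matrix `u` is what lets a frame belong to more than one cluster: thresholding each row (rather than taking only the maximum) would give the overlapping assignment the abstract mentions. If two clusters happen to peak at the same frame, this sketch returns fewer key frames than clusters.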

Please note that both Vicky Kalogeiton and Dim Papadopoulos are undergraduate students and members of the DUTH EECE Robotics team. I feel totally honored, thank you :)

On the occasion of the award, I would like to congratulate all members of the DUTH EECE Robotics team for the high quality papers presented at the EUREKA 2010 conference.

*4 papers were awarded

Thursday, October 14, 2010

Limited-time FREE access: International Journal of Pattern Recognition and Artificial Intelligence (IJPRAI)

This journal publishes both applications and theory-oriented articles on new developments in the fields of pattern recognition and artificial intelligence, and is of interest to researchers in both industry and academia. From the beginning, there has been a close relationship between the disciplines of pattern recognition and artificial intelligence. The recognition and understanding of sensory data such as speech or images, which are major concerns in pattern recognition, have always been considered important subfields of artificial intelligence.

As part of our contribution to the dissemination of scientific knowledge in the fields of Pattern Recognition and Artificial Intelligence, we have decided to offer free access to the latest volume of the journal. So sign up here and gain free access to the 2010 full-text articles today!

Saturday, October 9, 2010

Greece: Funding Opportunities for Postdoctoral Research

The Greek Ministry of Education, Lifelong Learning and Religious Affairs invites all interested beneficiaries to submit proposal summaries for their inclusion in the Action "Support of Postdoctoral Researchers".

The aim of this Action is to help Postdoctoral Researchers (PR) acquire new research skills that will promote their career development in any field and/or help them restart their careers after a leave of absence (provided no more than seven (7) years have passed since their doctorate was conferred). Emphasis will be given to supporting new scientists at the beginning of their careers. The duration of the research projects should range from 24 to 36 months. Each candidate can submit only one (1) research proposal. The total public cost of the present call is 30.000.000€, co-financed by the ESF (European Social Fund). The maximum budget for each project is 150.000€. At least 60% of the total budget should relate to costs pertaining to activities undertaken by the PR and should include a monthly net allowance of 1.600€, subject to cost-of-living adjustments for different countries.
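The numeric rules of the call can be checked mechanically before submitting. The sketch below encodes the limits stated above (24 to 36 months, at most 150.000€ per project, at least 60% of the budget on PR-related costs) plus one interpretation of our own: that the PR-related costs must at least cover the 1.600€ monthly net allowance over the whole project (for example 36 × 1.600€ = 57.600€ for a three-year project), ignoring cost-of-living adjustments. The function name and message strings are hypothetical; the official call documents remain the authority.

```python
from typing import List

MIN_MONTHS, MAX_MONTHS = 24, 36
MAX_BUDGET_EUR = 150_000
MIN_PR_SHARE_PERCENT = 60
MONTHLY_ALLOWANCE_EUR = 1_600  # net, before cost-of-living adjustments


def check_proposal(total_budget: int, pr_costs: int, months: int) -> List[str]:
    """Return the rules a proposal violates (an empty list means it passes these checks)."""
    problems = []
    if not MIN_MONTHS <= months <= MAX_MONTHS:
        problems.append("duration must be 24-36 months")
    if total_budget > MAX_BUDGET_EUR:
        problems.append("budget exceeds 150,000 EUR")
    # Integer percentages avoid floating-point rounding exactly at the 60% boundary.
    if pr_costs * 100 < MIN_PR_SHARE_PERCENT * total_budget:
        problems.append("PR-related costs below 60% of budget")
    # Our interpretation (not stated verbatim in the call): PR costs must cover the allowance.
    if pr_costs < MONTHLY_ALLOWANCE_EUR * months:
        problems.append("PR-related costs do not cover the monthly allowance")
    return problems
```

For instance, a 36-month project at the 150.000€ ceiling needs at least 90.000€ of PR-related costs, which comfortably covers the 57.600€ allowance.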

Read more, or contact me directly at savvash@gmail.com

Thursday, October 7, 2010

Final Call for Papers - ECIR 2011

33rd European Conference on Information Retrieval

Dublin, Ireland

18 - 21 April 2011

In cooperation with: BCS-IRSG, Dublin City University, University of Sheffield

http://ecir2011.dcu.ie

The 33rd European Conference on Information Retrieval (ECIR 2011) will take place in Dublin, Ireland from 18-21 April 2011. ECIR provides an opportunity for both new and established researchers to present research papers reporting new, unpublished, and innovative research results within Information Retrieval. ECIR has traditionally had a strong student focus and papers whose sole or main author is a postgraduate student or postdoctoral researcher are especially welcome. As an added incentive at ECIR2011, accepted full-papers whose first author is a student will be given the opportunity to be paired with a senior mentor who will interact with them during the course of the conference. Each mentor will be an expert in the relevant area of the student's work, and will provide questions and subsequent feedback after their presentation.

We are seeking the submission of high-quality and original full-length research papers, short-papers, posters and demos, which will be reviewed by experts on the basis of the originality of the work, the validity of the results, chosen methodology, writing quality and the overall contribution to the field of Information Retrieval. Papers that demonstrate a high level of research adventure or which break out of the traditional IR paradigms are particularly welcome.

Relevant topics include, but are not limited to:

- Enterprise Search, Intranet Search, Desktop Search, Adversarial IR

- Web IR and Web log analysis

- Multimedia IR

- Digital libraries

- IR Theory and Formal Models

- Distributed IR, Peer-to-peer IR

- Mobile IR, Fusion/Combination

- Cross-language retrieval, Multilingual retrieval, Machine translation for IR

- Topic detection and tracking, Routing

- Content-based filtering, Collaborative filtering, Agents, Spam filtering

- Question answering, NLP for IR

- Summarization, Lexical acquisition

- Text Data Mining

- Text Categorization, Clustering

- Performance, Scalability, Architectures, Efficiency, Platforms

- Indexing, Query representation, Query reformulation

- Structure-based representation, XML Retrieval

- Metadata, Social networking/tagging

- Evaluation methods and metrics, Experimental design, Test collections

- Interactive IR, User studies, User models, Task-based IR

- User interfaces and visualization

- Opinion mining, Sentiment Analysis

- Blog and online-community search

- Other domain-specific IR (e.g., Genomic IR, legal IR, IR for chemical structures)

The ECIR 2011 conference proceedings will be published by Springer in the Lecture Notes in Computer Science (LNCS) series. Authors of both long and short papers are invited to submit their papers on or before 15 October 2010. All paper submissions must be written in English following the LNCS author guidelines [http://www.springer.com/computer/lncs?SGWID=0-164-6-793341-0]. Full papers must not exceed 12 pages including references and figures, short-paper submissions should have a maximum length of 6 pages, and posters and demos must not be longer than 4 pages. All papers will be refereed through double-blind peer review, so authors should take reasonable care not to identify themselves in their submissions. The proceedings will be distributed to all delegates at the Conference.

Wednesday, October 6, 2010

CBMI 2011

Following the eight successful previous events of CBMI (Toulouse 1999, Brescia 2001, Rennes 2003, Riga 2005, Bordeaux 2007, London 2008, Chania 2009 and Grenoble 2010), the Video Processing and Understanding Lab (VPULab) and the Information Retrieval Group (IRG) at Universidad Autónoma de Madrid will organize the next CBMI event.

CBMI 2011 aims at bringing together the various communities involved in the different aspects of content-based multimedia indexing, retrieval, browsing and presentation. The scientific program of CBMI 2011 will include invited keynote talks and regular and special sessions with contributed research papers.

Topics of interest, grouped in technical tracks, include, but are not limited to:

Visual Indexing

- Visual indexing (image, video, graphics)

- Visual content extraction

- Identification and tracking of semantic regions

- Identification of semantic events

Audio and Multi-modal Indexing

- Audio indexing (audio, speech, music)

- Audio content extraction

- Multi-modal and cross-modal indexing

- Metadata generation, coding and transformation

Multimedia Information Retrieval

- Multimedia retrieval (image, audio, video, …)

- Matching and similarity search

- Content-based search

- Multimedia data mining

- Multimedia recommendation

- Large scale multimedia database management

Multimedia Browsing and Presentation

- Summarisation, browsing and organization of multimedia content

- Personalization and content adaptation

- User interaction and relevance feedback

- Multimedia interfaces, presentation and visualization tools

Selected papers will appear, after extension and peer-review, in a special issue of Multimedia Tools and Applications.