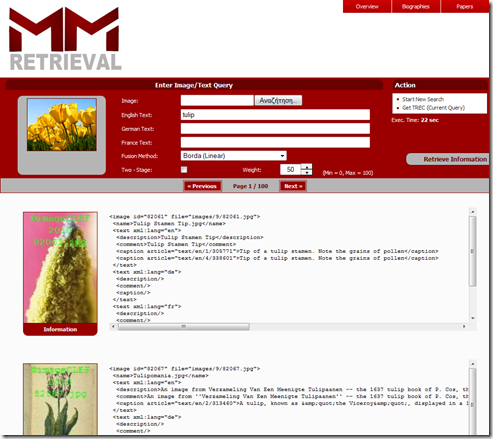

In this web application, we present an experimental multimodal search engine that accepts multimedia and multi-language queries and uses all of the information available in a multimodal collection. All modalities are indexed and searched separately, and the results can be fused with different methods depending on:

- the noise and completeness characteristics of the modalities in a collection

- whether the user needs high initial precision or high recall
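The post does not name the fusion methods the engine offers. As an illustration only, the sketch below fuses per-modality result lists with a weighted CombSUM over min-max normalized scores, a common late-fusion baseline. The function names, the modality labels, and the weights are all hypothetical; one might lower the weight of a noisier or less complete modality.

```python
from typing import Dict, List, Tuple


def min_max_normalize(scores: Dict[str, float]) -> Dict[str, float]:
    """Rescale one modality's scores to [0, 1] so modalities are comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        # All scores equal: treat every retrieved item as equally relevant.
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def weighted_comb_sum(
    runs: Dict[str, Dict[str, float]],
    weights: Dict[str, float],
) -> List[Tuple[str, float]]:
    """Fuse per-modality runs by summing weighted, normalized scores.

    `runs` maps a modality name to {item_id: raw_score}.
    `weights` maps a modality name to its (assumed) fusion weight;
    modalities without an entry default to 1.0.
    """
    fused: Dict[str, float] = {}
    for modality, scores in runs.items():
        w = weights.get(modality, 1.0)
        for doc, s in min_max_normalize(scores).items():
            fused[doc] = fused.get(doc, 0.0) + w * s
    # Highest fused score first; ties broken by item id for determinism.
    return sorted(fused.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical scores from two separately searched modalities.
runs = {
    "image": {"a": 0.9, "b": 0.1},
    "text": {"b": 5.0, "c": 1.0},
}
ranking = weighted_comb_sum(runs, {"image": 1.0, "text": 2.0})
```

Because each modality is searched separately, an item missing from one result list simply contributes nothing for that modality, which is one way to tolerate incomplete annotations.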

Beyond fusion, we also provide two-stage retrieval: the results obtained from the secondary modalities, which target recall, are first thresholded, and the surviving items are then re-ranked by the primary modality. The engine demonstrates the feasibility of the proposed architecture and methods on the ImageCLEF 2010 Wikipedia collection. The collection's primary modality is image, with 237,434 items, each associated with noisy and incomplete user-supplied annotations and with the Wikipedia articles that contain the image. The associated text modalities are written in any combination of English, German, French, or another, undetermined language.
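The two-stage retrieval described above can be sketched as follows. This is a minimal illustration, not the engine's implementation: it assumes scores are already available as dictionaries, that the threshold is a fixed score cut-off, and that an item without a primary-modality score ranks last. The function and variable names are hypothetical.

```python
from typing import Dict, List


def two_stage_retrieve(
    secondary_scores: Dict[str, float],
    primary_scores: Dict[str, float],
    threshold: float,
) -> List[str]:
    """Threshold on the secondary modality, then re-rank by the primary one."""
    # Stage 1: keep every item the recall-oriented secondary modality
    # scores at or above the (assumed) cut-off.
    candidates = [doc for doc, s in secondary_scores.items() if s >= threshold]
    # Stage 2: order the survivors by primary-modality score, best first.
    # Items with no primary score default to 0.0; ties are broken by id.
    return sorted(candidates, key=lambda d: (-primary_scores.get(d, 0.0), d))


# Hypothetical scores: text as the secondary modality, image as the primary.
text_scores = {"a": 0.8, "b": 0.3, "c": 0.6}
image_scores = {"a": 0.2, "b": 0.9, "c": 0.7}
reranked = two_stage_retrieve(text_scores, image_scores, threshold=0.5)
```

Here item `b` has the best image score but is dropped in the first stage because its text score falls below the cut-off, so the final order depends only on the primary modality among the items that survive.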
