ImageCLEF's Wikipedia Retrieval task provides a testbed for the system-oriented evaluation of visual information retrieval from a collection of Wikipedia images. The aim is to investigate retrieval approaches in the context of a large and heterogeneous collection of images (similar to those encountered on the Web) that are searched for by users with diverse information needs.

In 2010, ImageCLEF's Wikipedia Retrieval task will use a new collection of over 237,000 Wikipedia images that cover diverse topics of interest. These images are associated with unstructured and noisy textual annotations in English, French, and German.

This is an ad-hoc image retrieval task, so the evaluation scenario is similar to the classic TREC ad-hoc retrieval task and the ImageCLEF photo retrieval task: it simulates the situation in which a system knows the set of documents to be searched but cannot anticipate the particular topic that will be investigated (i.e. topics are not known to the system in advance). The goal of the simulation is, given a textual query (and/or sample images) describing a user's (multimedia) information need, to find as many relevant images as possible in the Wikipedia image collection.

Any method can be used to retrieve relevant documents. We encourage the use of both concept-based and content-based retrieval methods and, in particular, multimodal and (new this year) multilingual approaches that investigate the combination of evidence from different modalities and language resources.
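One common way to combine evidence from different modalities is late fusion: run a text search and a visual search separately, normalize each run's scores, and merge them with a weighted sum (CombSUM-style). The Python sketch below is only an illustration under our own assumptions, not a method prescribed by the task: the function names, the min-max normalization, the image ids and the weight `alpha = 0.7` are all hypothetical.

```python
from typing import Dict, List, Tuple


def min_max_normalize(scores: Dict[str, float]) -> Dict[str, float]:
    """Rescale one run's scores to [0, 1]; if all scores are equal, map them to 1.0."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def late_fusion(text_scores: Dict[str, float],
                visual_scores: Dict[str, float],
                alpha: float = 0.7) -> List[Tuple[str, float]]:
    """Weighted sum of normalized text and visual scores (hypothetical weighting).

    An image absent from one run contributes 0 for that modality.
    Returns (image_id, fused_score) pairs, best first; ties are broken by image id.
    """
    t = min_max_normalize(text_scores)
    v = min_max_normalize(visual_scores)
    fused = {doc: alpha * t.get(doc, 0.0) + (1 - alpha) * v.get(doc, 0.0)
             for doc in set(t) | set(v)}
    return sorted(fused.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical scores from a text run and a visual run for the same topic.
ranking = late_fusion({"img1": 12.0, "img2": 8.0, "img3": 2.0},
                      {"img2": 0.9, "img3": 0.5, "img4": 0.1})
print([doc for doc, _ in ranking])  # ['img2', 'img1', 'img3', 'img4']
```

With these inputs, `img2`, which scores reasonably well in both modalities, overtakes `img1`, which only matches the text query. The same function could, in principle, merge text runs computed over different language annotations (e.g. English and German) instead of a text and a visual run.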

ImageCLEF 2010 Wikipedia Collection

The ImageCLEF 2010 Wikipedia collection consists of 237,434 images and their associated user-supplied annotations. The collection was built to cover similar topics in English, German and French. Topical similarity was obtained by selecting only Wikipedia articles that have versions in all three languages and are illustrated with at least one image in each version: 44,664 such articles were extracted from the September 2009 Wikipedia dumps, containing a total of 265,987 images. Since the collection is intended to be freely distributed, we removed all images with unclear copyright status. After this step, duplicate elimination and some additional clean-up, 237,434 images remain in the collection, with the following language distribution:

- English only: 70,127

- German only: 50,291

- French only: 28,461

- English and German: 26,880

- English and French: 20,747

- German and French: 9,646

- English, German and French: 22,899

- Language undetermined: 8,144

- No textual annotation: 239
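As a quick consistency check, the figures in this distribution can be tallied. The Python sketch below uses our own labels (`en`, `de`, `fr`) and helper names, which are not part of the collection's metadata; it confirms that the nine categories add up to 237,434 and derives how many images carry an annotation in each language.

```python
# Language distribution of the ImageCLEF 2010 Wikipedia collection, copied from the list above.
# The language codes "en", "de", "fr" and the helper names are our own labels.
DISTRIBUTION = {
    frozenset({"en"}): 70_127,
    frozenset({"de"}): 50_291,
    frozenset({"fr"}): 28_461,
    frozenset({"en", "de"}): 26_880,
    frozenset({"en", "fr"}): 20_747,
    frozenset({"de", "fr"}): 9_646,
    frozenset({"en", "de", "fr"}): 22_899,
}
LANGUAGE_UNDETERMINED = 8_144
NO_ANNOTATION = 239


def total_images() -> int:
    """All images: every language combination plus the two unlabeled categories."""
    return sum(DISTRIBUTION.values()) + LANGUAGE_UNDETERMINED + NO_ANNOTATION


def images_with_language(lang: str) -> int:
    """Images annotated in `lang`, alone or together with other languages."""
    return sum(count for langs, count in DISTRIBUTION.items() if lang in langs)


print(total_images())  # 237434
for lang in ("en", "de", "fr"):
    print(lang, images_with_language(lang))
```

Running it prints 237434 for the total, then 140,653 English, 109,716 German and 81,753 French annotated images. The per-language counts overlap because a multilingual image is counted once for each of its languages, and images whose language could not be determined are left out of them.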

The main difference between the ImageCLEF 2010 Wikipedia collection and the INEX MM collection (Westerveld and van Zwol, 2007) used in the previous WikipediaMM tasks is that the multilingual aspect has been reinforced, so both mono- and cross-lingual evaluations can be carried out. Another difference is that this year participants will receive, for each image, both its user-provided annotation and links to the article(s) that contain it. Finally, in order to encourage multimodal approaches, three types of low-level image features were extracted using PIRIA, CEA LIST's image indexing tool (Joint et al., 2004), and are provided to all participants.

(Joint et al., 2004) M. Joint, P.-A. Moëllic, P. Hède, P. Adam. PIRIA: a general tool for indexing, search and retrieval of multimedia content. In Proceedings of SPIE, 2004.

(Westerveld and van Zwol, 2007) T. Westerveld and R. van Zwol. The INEX 2006 Multimedia Track. In N. Fuhr, M. Lalmas, and A. Trotman, editors, Advances in XML Information Retrieval: Fifth International Workshop of the Initiative for the Evaluation of XML Retrieval, INEX 2006, Lecture Notes in Computer Science/Lecture Notes in Artificial Intelligence (LNCS/LNAI). Springer-Verlag, 2007.

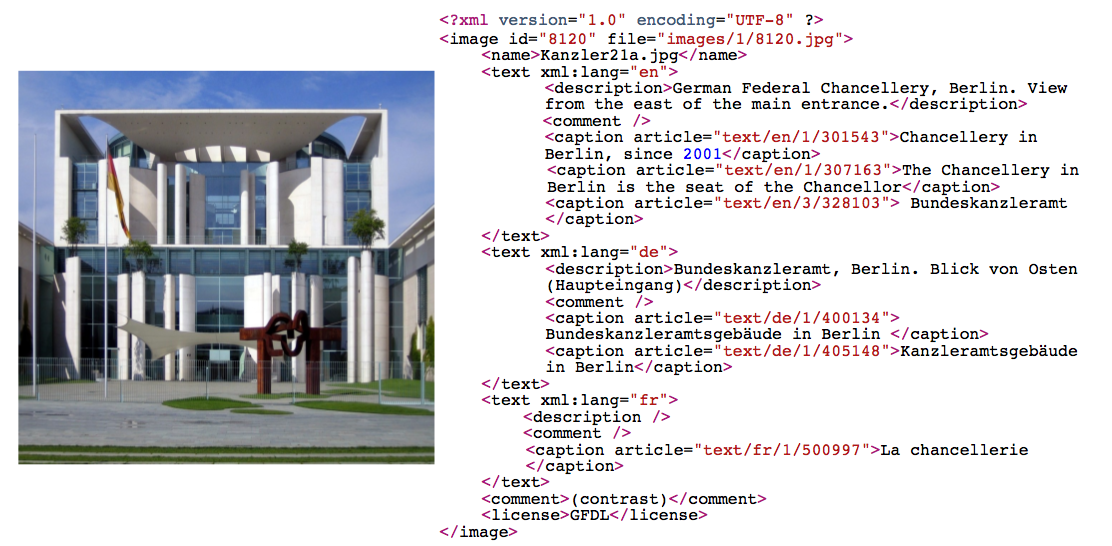

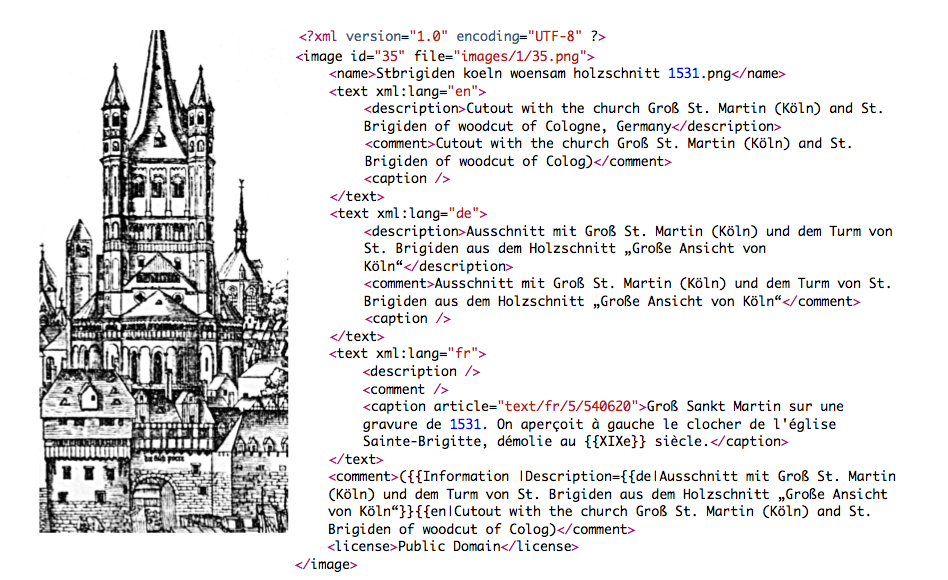

Two examples that illustrate the images in the collection and their metadata are provided below:

Evaluation Objectives

The characteristics of the new Wikipedia collection allow for the investigation of the following objectives:

- how well do the retrieval approaches cope with larger scale image collections?

- how well do the retrieval approaches cope with noisy and unstructured textual annotations?

- how well do the content-based retrieval approaches cope with images that cover diverse topics and are of varying quality?

- how well can systems exploit and combine different modalities given a user's multimedia information need? Can they outperform monomodal approaches such as query-by-text, query-by-concept or query-by-image?

- how well can systems exploit the multiple language resources? Can they outperform monolingual approaches that use, for example, only the English text annotations?

The schedule is as follows:

- 15.2.2010: registration opens for all ImageCLEF tasks

- 30.3.2010: data release (images + metadata + article)

- 26.4.2010: topic release

- 15.5.2010: registration closes for all ImageCLEF tasks

- 11.6.2010: submission of runs

- 16.7.2010: release of results

- 15.8.2010: submission of working notes papers

- 20.9.2010-23.9.2010: CLEF 2010 Conference, Padova, Italy
