ImageCLEF is the cross-language image retrieval track run as part of the Cross Language Evaluation Forum (CLEF) campaign. The ImageCLEF retrieval benchmark was established in 2003 with the aim of evaluating image retrieval from multilingual document collections. Images are by their very nature language independent, but they are often accompanied by texts that are semantically related to the image (e.g. textual captions or metadata). Images can then be retrieved using primitive features based on the pixels which form the contents of an image (e.g. using a visual exemplar), abstracted features expressed through text, or a combination of both. The language used to express the associated texts or textual queries should not affect retrieval, i.e. an image with a caption written in English should be searchable in languages other than English.

ImageCLEF's Wikipedia Retrieval task provides a testbed for the system-oriented evaluation of visual information retrieval from a collection of Wikipedia images. The aim is to investigate retrieval approaches in the context of a large and heterogeneous collection of images (similar to those encountered on the Web) that are searched for by users with diverse information needs.

In 2010, ImageCLEF's Wikipedia Retrieval used a new collection of over 237,000 Wikipedia images that cover diverse topics of interest. These images are associated with unstructured and noisy textual annotations in English, French, and German.

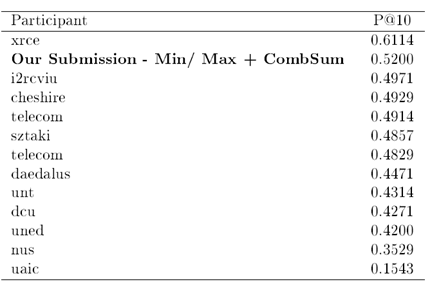

Based on the best run per group, as illustrated in the following table, we ranked 2nd out of 13 groups in precision at 10 (P@10):

Similarly, as illustrated in the following table, based on the best run per group, we ranked 2nd out of 13 groups in precision at 20 (P@20):

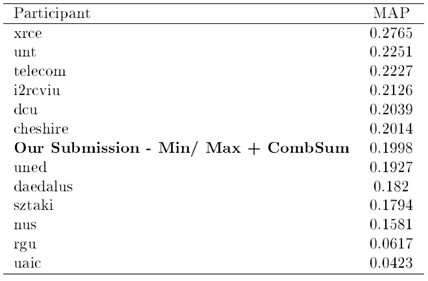

Overall, based on the best run per group, as illustrated in the following table, we ranked 7th out of 13 groups in mean average precision (MAP):
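For readers less familiar with the measures quoted above, here is a minimal Python sketch of how P@k and MAP are commonly defined. It is only an illustration under stated assumptions, not the official ImageCLEF evaluation tooling: the function names, the image ids, and the `runs`/`qrels` dictionary layout are all hypothetical.

```python
from typing import Dict, List, Set


def precision_at_k(ranked: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top-k retrieved images that are relevant.

    Divides by k even when fewer than k images were retrieved.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for image_id in ranked[:k] if image_id in relevant)
    return hits / k


def average_precision(ranked: List[str], relevant: Set[str]) -> float:
    """Mean of the precision values at each rank where a relevant image appears.

    Relevant images that are never retrieved contribute zero.
    """
    if not relevant:
        return 0.0
    hits = 0
    total = 0.0
    for rank, image_id in enumerate(ranked, start=1):
        if image_id in relevant:
            hits += 1
            total += hits / rank
    return total / len(relevant)


def mean_average_precision(
    runs: Dict[str, List[str]], qrels: Dict[str, Set[str]]
) -> float:
    """Average of the per-topic average precision over all judged topics."""
    if not qrels:
        return 0.0
    per_topic = [
        average_precision(runs.get(topic, []), relevant)
        for topic, relevant in qrels.items()
    ]
    return sum(per_topic) / len(per_topic)


# Hypothetical toy data: one topic, four retrieved images, three relevant ones.
toy_runs = {"topic-1": ["img-a", "img-b", "img-c", "img-d"]}
toy_qrels = {"topic-1": {"img-a", "img-c", "img-e"}}

p_at_2 = precision_at_k(toy_runs["topic-1"], toy_qrels["topic-1"], 2)
toy_map = mean_average_precision(toy_runs, toy_qrels)
```

P@10 and P@20 only look at the top of the ranking, while MAP also rewards finding every relevant image for a topic, so a system can rank differently on the two kinds of measure.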

More details about our fusion methods will be added soon.

![jansen_en_detail[1] jansen_en_detail[1]](http://lh3.ggpht.com/_mEhPqpXDNKY/TEmM46CyDmI/AAAAAAAABQs/_Kj-tdFLar0/jansen_en_detail%5B1%5D_thumb%5B3%5D.png?imgmax=800)

![2[2] 2[2]](http://lh4.ggpht.com/_mEhPqpXDNKY/TEQq2O0PAgI/AAAAAAAABQA/b5OLYuOBiV0/2%5B2%5D_thumb%5B1%5D.jpg?imgmax=800)

![3[1] 3[1]](http://lh6.ggpht.com/_mEhPqpXDNKY/TEQq3KkFZhI/AAAAAAAABQI/DnLs4lcbA18/3%5B1%5D_thumb%5B1%5D.jpg?imgmax=800)

![4[1] 4[1]](http://lh3.ggpht.com/_mEhPqpXDNKY/TEQq4ATuBvI/AAAAAAAABQQ/zZofWqfmtyM/4%5B1%5D_thumb%5B1%5D.jpg?imgmax=800)

![1[2] 1[2]](http://lh6.ggpht.com/_mEhPqpXDNKY/TEQq5IJl32I/AAAAAAAABQY/RXHx-XFazTU/1%5B2%5D_thumb%5B1%5D.jpg?imgmax=800)