Original Article: http://multimediacommunication.blogspot.com/ by Christian Timmerer

The 97th MPEG meeting in Torino brought some interesting news, which I'd like to report here briefly. As usual, an official press release will be published within the next few weeks. In the meantime, I'd like to report on the following topics:

- MPEG Unified Speech and Audio Coding (USAC) reached FDIS status

- Call for Proposals: Compact Descriptors for Visual Search (CDVS)

- Call for Proposals: Internet Video Coding (IVC)

MPEG Unified Speech and Audio Coding (USAC) reached FDIS status

ISO/IEC 23003-3, aka Unified Speech and Audio Coding (USAC), reached FDIS status and will soon be an International Standard. The FDIS itself won't be publicly available, but the Unified Speech and Audio Coding Verification Test Report is expected to be, in September 2011 (most likely here).

Call for Proposals: Compact Descriptors for Visual Search (CDVS)

I reported on this previously; here is the final CfP, including the evaluation framework.

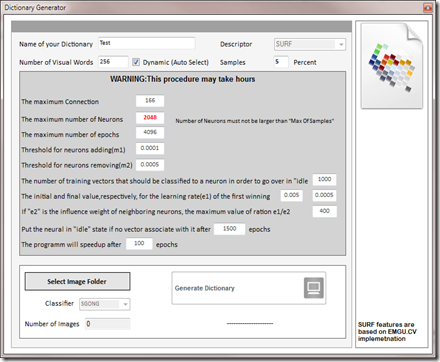

MPEG is planning to standardize technologies that will enable efficient and interoperable design of visual search applications. In particular, we are seeking technologies for visual content matching in images or video. Visual content matching includes matching of views of objects, landmarks, and printed documents that is robust to partial occlusions as well as changes in vantage point, camera parameters, and lighting conditions.

There are a number of component technologies that are useful for visual search, including the format of visual descriptors, the descriptor extraction process, and indexing and matching algorithms. As a minimum, the format of descriptors as well as parts of their extraction process should be defined to ensure interoperability.
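To make the matching component a bit more concrete, here is a minimal sketch of nearest-neighbour descriptor matching. It is only an illustration under assumptions of my own: descriptors are taken to be short binary strings stored as Python integers and compared by Hamming distance, and the names `hamming`, `match_descriptors`, and `max_distance` are hypothetical. The CfP does not prescribe this format or this algorithm.

```python
from typing import List, Tuple


def hamming(a: int, b: int) -> int:
    """Count the bits that differ between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_descriptors(
    query: List[int], database: List[int], max_distance: int = 2
) -> List[Tuple[int, int, int]]:
    """Match each query descriptor to its nearest database descriptor.

    Returns (query_index, database_index, distance) for every query
    descriptor whose nearest neighbour is within max_distance bits.
    The threshold value is an illustrative assumption.
    """
    if not database:
        return []
    matches = []
    for qi, q in enumerate(query):
        # Brute-force search; a real system would use an index instead.
        best_dist, best_idx = min(
            (hamming(q, d), di) for di, d in enumerate(database)
        )
        if best_dist <= max_distance:
            matches.append((qi, best_idx, best_dist))
    return matches


# Toy 8-bit descriptors, invented for this example.
query = [0b10110010, 0b01001101, 0b11111111]
database = [0b10110011, 0b00000000, 0b01001101]
print(match_descriptors(query, database))
```

The brute-force loop is only there to keep the example short; the indexing technologies mentioned above are exactly what would replace it in a real visual search system.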

It is envisioned that a standard for compact descriptors will:

- ensure interoperability of visual search applications and databases,

- enable a high level of performance of implementations conformant to the standard,

- simplify design of descriptor extraction and matching for visual search applications,

- enable hardware support for descriptor extraction and matching in mobile devices,

- reduce load on wireless networks carrying visual search-related information.

It is envisioned that such a standard will provide a complementary tool to the suite of existing MPEG standards, such as MPEG-7 Visual Descriptors. To build a full visual search application, this standard may be used jointly with other existing standards, such as MPEG Query Format, HTTP, XML, JPEG, JPSec, and JPSearch.

The Call for Proposals and the Evaluation Framework are publicly available. From a research perspective, it would be interesting to see how technologies submitted in response to the CfP compare with existing approaches and applications/services.

Call for Proposals: Internet Video Coding (IVC)

I reported on this previously; the final CfP for Internet Video Coding Technologies will be available around August 5th, 2011. However, you can already have a look at the requirements, which reveal some interesting issues the call will be about:

- Real-time communications, video chat, video conferencing,

- Mobile streaming, broadcast and communications,

- Mobile devices and Internet connected embedded devices,

- Internet broadcast streaming, downloads,

- Content sharing.

Requirements fall into the following major categories:

- IPR requirements

- Technical requirements

- Implementation complexity requirements

Clearly, this work item is optimized towards the IPR requirements, but the others are not excluded. In particular,

It is anticipated that any patent declaration associated with the Baseline Profile of this standard will indicate that the patent owner is prepared to grant a free of charge license to an unrestricted number of applicants on a worldwide, non-discriminatory basis and under other reasonable terms and conditions to make, use, and sell implementations of the Baseline Profile of this standard in accordance with the ITU-T/ITU-R/ISO/IEC Common Patent Policy.

Further information can be found at the MPEG Web site, specifically under the hot news section and the press release. Working documents of any MPEG standard so far can be found here. If you want to join any of these activities, the list of Ad-hoc Groups (AhG) is available here (soon also here), including information on how to join their reflectors.
