Recently, I found these new documents regarding MPEG-7, the Multimedia Content Description Interface.

The past decades have seen exponential growth in the use of digital media. Early solutions for managing these massive amounts of digital media fell short of expectations, stimulating intensive research in areas such as Content-Based Image Retrieval (CBIR) and, most recently, Visual Search (VS) and Mobile Visual Search (MVS).

Visual Search has been researched for more than a decade, leading to recent deployments in the marketplace. Many companies are developing proprietary solutions to the VS challenges, which has resulted in a fragmented technological landscape and a plethora of non-interoperable systems. In response, MPEG is introducing a new worldwide standard for VS and MVS technology.

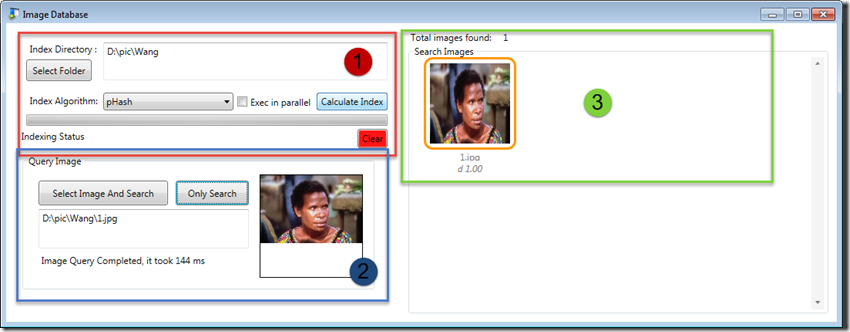

MPEG’s Compact Descriptors for Visual Search (CDVS) aims to standardize technologies that enable an interoperable, efficient, and cross-platform solution for internet-scale visual search applications and services.

The forthcoming CDVS standard is particularly important because it will ensure interoperability of visual search applications and databases, enable a high level of performance in implementations that conform to the standard, and simplify the design of descriptor extraction and matching for visual search applications. It will also enable low-complexity, low-memory hardware support for descriptor extraction and matching in mobile devices, and significantly reduce the load on wireless networks carrying visual search-related information. All this will stimulate the creation of an ecosystem benefiting consumers, manufacturers, and content and service providers alike.

- Reference Software

N15127, Text of ISO/IEC CD 15938-6:201X Reference software (2nd edition) - Compact Descriptors for Visual Search

N15129, Test Model 13: Compact Descriptors for Visual Search - Compact Descriptors for Visual Search

N15132, Announcement of CDVS Awareness Event - Compact Descriptors for Visual Search

N15128, Working draft 3 of CDVS Reference Software and Conformance Testing