Finally, the MATLAB implementation of CEDD is available online.

The source code is simple and easy to use. A main function extracts the CEDD descriptor from a given image.

Download the MATLAB implementation of CEDD (for academic purposes only)

A few words about the CEDD descriptor:

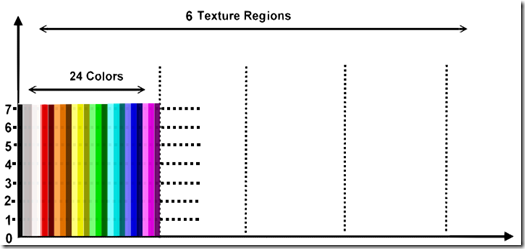

Descriptors that combine more than one feature in a compact histogram can be regarded as members of the family of Compact Composite Descriptors (CCDs). A typical example of a CCD is CEDD (Color and Edge Directivity Descriptor). The structure of CEDD consists of 6 texture areas, and each texture area is divided into 24 sub-regions, with each sub-region describing a color. This gives $6 \times 24 = 144$ bins in total. CEDD's color information comes from 2 fuzzy systems that map the colors of the image to a custom 24-color palette. To extract texture information, CEDD uses a fuzzy version of the five digital filters proposed by the MPEG-7 Edge Histogram Descriptor (EHD).

The CEDD extraction procedure is outlined as follows. When an image block (a rectangular part of the image) enters the system that extracts a CCD, it passes through 2 units simultaneously. The first unit, the color unit, classifies the block into one of the 24 shades used by the system. Let this be color $m$, $m \in [0,23]$. The second unit, the texture unit, classifies the block into texture area $a$, $a \in [0,5]$. The block is then assigned to bin $a \times 24 + m$. The process is repeated for every block of the image. On completion, the histogram is normalized to the interval [0,1] and quantized to three bits per bin for binary representation.
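The bin-assignment and normalization steps above can be sketched as follows. This is a minimal illustration, not the MATLAB code being distributed. It assumes that the color and texture units have already produced one `(a, m)` label pair per block; the fuzzy units themselves are not implemented. The function names `accumulate_bins` and `normalize_and_quantize` are hypothetical. The sketch also assumes, purely for illustration, that normalization divides by the largest bin and that quantization uses 8 uniform levels (3 bits). The original CEDD may instead use a non-uniform quantization table.

```python
from typing import Iterable, List, Tuple

NUM_COLORS = 24     # color unit output m in [0, 23]
NUM_TEXTURES = 6    # texture unit output a in [0, 5]
NUM_BINS = NUM_TEXTURES * NUM_COLORS  # 6 * 24 = 144 bins


def accumulate_bins(block_labels: Iterable[Tuple[int, int]]) -> List[int]:
    """Count image blocks per bin; each (a, m) pair goes to bin a * 24 + m."""
    hist = [0] * NUM_BINS
    for a, m in block_labels:
        if not (0 <= a < NUM_TEXTURES and 0 <= m < NUM_COLORS):
            raise ValueError(f"label out of range: a={a}, m={m}")
        hist[a * NUM_COLORS + m] += 1
    return hist


def normalize_and_quantize(hist: List[int], bits: int = 3) -> List[int]:
    """Scale bins into [0, 1], then map each to an integer code of `bits` bits.

    Assumption (illustration only): divide by the largest bin and quantize
    uniformly; the original CEDD may use a non-uniform quantization table.
    """
    peak = max(hist)
    levels = (1 << bits) - 1  # 3 bits -> integer codes 0..7
    if peak == 0:
        return [0] * len(hist)
    return [round(v / peak * levels) for v in hist]


if __name__ == "__main__":
    # Three hypothetical blocks: two in texture area 0 / color 5,
    # one in texture area 2 / color 23 (bin 2 * 24 + 23 = 71).
    labels = [(0, 5), (0, 5), (2, 23)]
    hist = accumulate_bins(labels)
    codes = normalize_and_quantize(hist)
    print(hist[5], hist[71])    # 2 1
    print(codes[5], codes[71])  # 7 4
```

With 144 bins at 3 bits each, the quantized descriptor occupies 432 bits, i.e. 54 bytes.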

The most important attribute of CEDD is the very good retrieval results it achieves on several well-known benchmark image databases. The following table shows the ANMRR (Averaged Normalized Modified Retrieval Rank) results on 3 image databases. ANMRR ranges from 0 to 1, and the smaller its value, the better the matching quality of the query. ANMRR is the evaluation criterion used in all of the MPEG-7 color core experiments.
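To make the ANMRR scale concrete, here is a small sketch of the measure as it is commonly stated for the MPEG-7 experiments. For a query $q$ with $NG(q)$ ground-truth images, only the first $K(q) = \min(4\,NG(q), 2\,GTM)$ results count, where $GTM$ is the largest $NG$ over all queries. A ground-truth image not found within $K(q)$ gets the penalty rank $1.25\,K(q)$. The per-query score is $NMRR(q) = \frac{AVR(q) - 0.5 - NG(q)/2}{1.25\,K(q) - 0.5 - NG(q)/2}$, where $AVR(q)$ is the mean rank. ANMRR is the mean of $NMRR$ over all queries. The function name `anmrr` and its dictionary-based input format are hypothetical choices for this sketch. It is not the evaluation code behind the table.

```python
from typing import Dict, Sequence, Set


def anmrr(results: Dict[str, Sequence[str]],
          ground_truth: Dict[str, Set[str]]) -> float:
    """Average Normalized Modified Retrieval Rank over all queries.

    results: query id -> ranked list of retrieved image ids (best first).
    ground_truth: query id -> non-empty set of relevant image ids.
    """
    gtm = max(len(gt) for gt in ground_truth.values())
    nmrr_values = []
    for q, gt in ground_truth.items():
        ng = len(gt)
        k = min(4 * ng, 2 * gtm)   # only the first K(q) results count
        penalty = 1.25 * k         # rank assigned to items not found in top K
        found = {}
        for pos, item in enumerate(results.get(q, [])[:k], start=1):
            if item in gt and item not in found:
                found[item] = pos  # 1-based rank of first occurrence
        avr = sum(found.get(item, penalty) for item in gt) / ng
        mrr = avr - 0.5 - ng / 2
        nmrr_values.append(mrr / (penalty - 0.5 - ng / 2))
    return sum(nmrr_values) / len(nmrr_values)


if __name__ == "__main__":
    gt = {"q1": {"a", "b"}}
    print(anmrr({"q1": ["a", "b", "x"]}, gt))            # 0.0 (perfect)
    print(anmrr({"q1": ["x", "y"]}, gt))                 # 1.0 (nothing found)
    print(round(anmrr({"q1": ["a", "x", "b"]}, gt), 3))  # 0.143
```

A perfect ranking yields 0 and a ranking that misses every relevant image yields 1. This matches the 0-to-1 range described above.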
